gRPC – JSON Transcoding with ASP.NET Core and More – Features and Manual

Transcoding JSON to gRPC

gRPC is a powerful Remote Procedure Call (RPC) framework. It uses message contracts, Protobuf, streaming, and HTTP/2 to build high-performance, real-time services.

One drawback of gRPC is that it is not compatible with every platform. Because browsers do not fully support what gRPC needs from HTTP/2, REST APIs and JSON remain the main way to deliver data to browser apps. Despite the advantages that gRPC offers, REST APIs and JSON still have an important place in modern apps. Building both gRPC services and JSON Web APIs for the same functionality adds needless development overhead.

What is gRPC JSON transcoding?

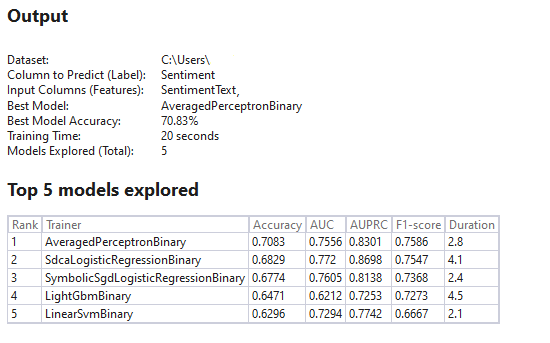

gRPC JSON transcoding is an extension for ASP.NET Core that creates RESTful JSON APIs for gRPC services. Once configured, transcoding lets apps call gRPC services using familiar HTTP concepts:

- HTTP verbs

- URL parameter binding

- JSON requests/responses

About JSON transcoding implementation

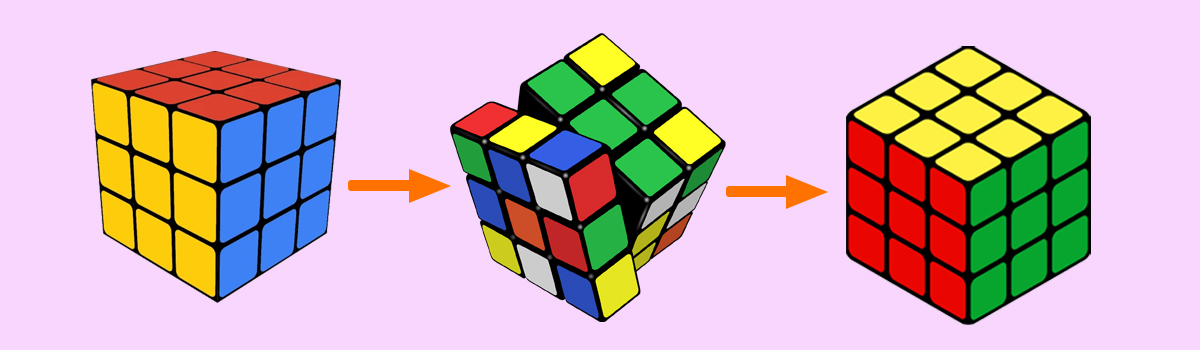

The idea of transcoding between gRPC and JSON is not new. grpc-gateway is another tool for creating RESTful JSON APIs from gRPC services. Let’s take a closer look at how it works.

It uses the same .proto annotations to map HTTP concepts to gRPC services. The key difference is in how each tool is implemented.

grpc-gateway uses code generation to build a reverse-proxy server. The reverse proxy translates RESTful calls into gRPC+Protobuf and sends them over HTTP/2 to the gRPC service.

In my opinion, the advantage of this approach is that the gRPC service does not need to know about the RESTful JSON APIs. Any gRPC server can be used with grpc-gateway.

gRPC JSON transcoding, by contrast, runs inside an ASP.NET Core app. It deserializes JSON into Protobuf messages and then invokes the gRPC service directly. We think JSON transcoding benefits .NET app developers in a number of ways:

- Fewer moving parts: one ASP.NET Core app serves both the gRPC services and the mapped RESTful JSON APIs.

- Performance: JSON transcoding deserializes JSON to Protobuf messages and invokes the gRPC service directly. Doing this in-process offers significant performance benefits compared with making a new gRPC call to a separate server.

- Cost: fewer servers mean a smaller monthly hosting bill.

JSON transcoding does not replace MVC or Minimal APIs. It only supports JSON, and it is quite opinionated about how Protobuf maps to JSON.

Mark up gRPC methods:

gRPC methods must be annotated with an HTTP rule before they support transcoding. The HTTP rule describes how to call the gRPC method, including the HTTP method and the route.

Let’s take a look at the example below:

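    // The google.api.http option is defined in this import.
    import "google/api/annotations.proto";

    service Greeter {
      rpc SayHello (HelloRequest) returns (HelloReply) {
        // HTTP rule: expose SayHello as GET /v1/greeter/{name}
        option (google.api.http) = {
          get: "/v1/greeter/{name}"
        };
      }
    }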

In the preceding example:

- A Greeter service is defined with a SayHello method. The method has an HTTP rule, specified with the google.api.http option.

- The method is reachable with GET requests at the /v1/greeter/{name} route.

- The name field of the request message is bound to the route parameter.
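To make the route binding concrete, here is a minimal TypeScript sketch of how a client could fill the route template from a request object. The `expandRoute` helper is hypothetical and not part of any gRPC library, and it only handles simple `{field}` placeholders, not the full HTTP rule template syntax.

```typescript
// Hypothetical helper: expands simple {field} placeholders in a route
// template using values from a request object. It does not handle the
// richer template syntax (nested fields, wildcards) that HTTP rules allow.
function expandRoute(template: string, request: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, field: string) => {
    const value = request[field];
    if (value === undefined) {
      throw new Error(`Missing route parameter: ${field}`);
    }
    // Encode the value so it is safe inside a URL path segment.
    return encodeURIComponent(value);
  });
}

// The "name" field of the request fills the {name} route parameter.
const helloUrl = expandRoute("/v1/greeter/{name}", { name: "world" });
// helloUrl is "/v1/greeter/world"
```

A GET request to the resulting URL is what transcoding maps back onto the SayHello method, with the path segment becoming the request's name field.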

Streaming methods:

Traditional gRPC over HTTP/2 supports streaming in all directions. Transcoding is limited to server streaming only. Client streaming and bidirectional streaming methods are not supported.

Server streaming methods use line-delimited JSON. Each message written with WriteAsync is serialized to JSON and followed by a new line.

The following server streaming method writes three messages:

    public override async Task StreamingFromServer(ExampleRequest request,
        IServerStreamWriter<ExampleResponse> responseStream, ServerCallContext context)
    {
        for (var i = 1; i <= 3; i++)
        {
            // Each WriteAsync call becomes one line of JSON in the response.
            await responseStream.WriteAsync(new ExampleResponse { Text = $"Message {i}" });

            // Pause for a second between messages.
            await Task.Delay(TimeSpan.FromSeconds(1));
        }
    }

Three line-delimited JSON objects are sent to the client:

    {"Text":"Message 1"}
    {"Text":"Message 2"}
    {"Text":"Message 3"}

Note that the WriteIndented JSON setting does not apply to server streaming methods. Pretty printing adds new lines and whitespace to the JSON, which cannot be used with line-delimited JSON.
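On the client side, line-delimited JSON can be consumed by buffering text until a new line arrives and parsing each completed line on its own. The TypeScript sketch below illustrates this under stated assumptions: `LineDelimitedJsonReader` is a hypothetical helper, not part of any gRPC library, and the `ExampleResponse` shape is taken from the three JSON objects shown above.

```typescript
// Shape of each streamed message, matching the JSON objects shown above.
interface ExampleResponse {
  Text: string;
}

// Hypothetical helper (not part of any gRPC library): buffers response text
// and returns one parsed message per completed line.
class LineDelimitedJsonReader {
  private buffer = "";

  push(chunk: string): ExampleResponse[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last element is an unfinished line (or "") and stays buffered.
    this.buffer = lines.pop() ?? "";
    return lines
      .filter((line) => line.trim().length > 0)
      .map((line) => JSON.parse(line) as ExampleResponse);
  }
}

const reader = new LineDelimitedJsonReader();
// Chunks can split a message anywhere, so the second message arrives in two parts.
const firstBatch = reader.push('{"Text":"Message 1"}\n{"Text":"Mess');
const secondBatch = reader.push('age 2"}\n{"Text":"Message 3"}\n');
// firstBatch holds "Message 1"; secondBatch holds "Message 2" and "Message 3".
```

Because every line is parsed by itself, a pretty-printed message spread over several lines would fail to parse here, which is why indentation is not applied to streamed responses.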

HTTP protocol

The ASP.NET Core gRPC service template included in the .NET SDK creates an app that is only configured for HTTP/2. HTTP/2 is a good default when an app only supports traditional gRPC over HTTP/2. Transcoding, however, works with both HTTP/1.1 and HTTP/2. Some platforms, such as Unity and UWP, cannot use HTTP/2. To support all client apps, configure the server to enable both HTTP/1.1 and HTTP/2.

Update the default protocol in appsettings.json:

    {
      "Kestrel": {
        "EndpointDefaults": {
          "Protocols": "Http1AndHttp2"
        }
      }
    }

Enabling HTTP/1.1 and HTTP/2 on the same port requires TLS for protocol negotiation. For more information about configuring HTTP protocols in a gRPC app, see ASP.NET Core gRPC protocol negotiation.

gRPC JSON transcoding vs gRPC-Web

Both transcoding and gRPC-Web allow gRPC services to be called from a browser. Each goes about it in a different way, so let’s take a look at the main differences between the two:

- gRPC-Web lets browser apps call gRPC services from the browser with the gRPC-Web client and Protobuf. gRPC-Web requires the browser app to generate a gRPC client, and it has the advantage of sending small, fast Protobuf messages.

- Transcoding enables browser-based applications to use gRPC services as if they were JSON-based RESTful APIs. The browser app does not need to create a gRPC client or be familiar with gRPC in any way.

The earlier Greeter service can be called from browser JavaScript with standard APIs such as fetch, by sending a GET request to the /v1/greeter/{name} route.
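A browser call to the Greeter service might look like the following TypeScript sketch. Several names here are assumptions rather than details from the service definition: `sayHello`, `FetchLike` and `baseUrl` are hypothetical, and `HelloReply` is assumed to carry a single string field called `message`. The fetch function is passed in as a parameter so the sketch does not depend on browser typings.

```typescript
// Minimal structural types so the sketch does not depend on DOM typings.
interface JsonResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type FetchLike = (url: string) => Promise<JsonResponse>;

// Assumption: HelloReply has a string field called "message".
interface HelloReply {
  message: string;
}

// Calls the transcoded SayHello method with an HTTP GET request.
// baseUrl is a placeholder for wherever the ASP.NET Core app is hosted.
async function sayHello(
  fetchFn: FetchLike,
  baseUrl: string,
  userName: string
): Promise<HelloReply> {
  const url = `${baseUrl}/v1/greeter/${encodeURIComponent(userName)}`;
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  // The body is plain JSON, so no gRPC client is needed to read it.
  return (await response.json()) as HelloReply;
}
```

In a browser, something like `sayHello((url) => fetch(url), "", "world")` should work, where the empty base URL assumes the page is served from the same origin as the API.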
