Infrastructure as Code Tools: Role and Best IaC Tools

Why Are Infrastructure as Code Tools Used in Cloud Platforms?

IaC is a method of managing and describing data centers through machine-readable configuration files rather than by manually editing configurations on servers or the connections between infrastructure components. The code that operates the infrastructure is normally written in either a declarative or an imperative style.
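To make the difference between the two styles concrete, here is a minimal sketch in Python. It is only an illustration: the list of server names stands in for real infrastructure, and the function names `scale_imperative` and `scale_declarative` are hypothetical, not part of any IaC tool.

```python
# Illustrative only: "servers" is an in-memory list, not a real cloud API.

def scale_imperative(servers: list[str]) -> list[str]:
    """Imperative style: spell out each step to perform."""
    servers = list(servers)
    servers.append("web-3")  # step 1: add one server
    servers.append("web-4")  # step 2: add another
    return servers


def scale_declarative(servers: list[str], desired_count: int) -> list[str]:
    """Declarative style: state the end result and let the tool find the steps."""
    servers = list(servers)
    while len(servers) < desired_count:
        servers.append(f"web-{len(servers) + 1}")
    while len(servers) > desired_count:
        servers.pop()
    return servers


current = ["web-1", "web-2"]
print(scale_imperative(current))
print(scale_declarative(current, desired_count=4))
```

Both calls produce four servers here. The difference shows on a second run: applying the declarative version again changes nothing, while repeating the imperative steps would add two more servers.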

Infrastructure as code (IaC) is a common practice in cloud computing. Its core principle is to describe infrastructure in code and to manage that code with the ordinary software development process. It is a core DevOps practice and a component of continuous delivery. IaC lets DevOps teams work quickly, reliably, and at scale, using a single set of methods and tools both to develop software and to maintain infrastructure.

From Hardware to Cloud Formation Tools

Many people probably no longer remember the iron age, when we had to buy our own servers and computers. At that time no one had any idea what cloud formation tools were. Today it seems crazy that the hardware purchasing cycle could limit infrastructure growth. Delivering and installing a new server used to take weeks! Software became available to developers many days after the hardware was installed.

The first cloud computing services appeared only in the mid-2000s. They made it possible to launch new virtual machine instances quickly, which brought businesses and developers problems as well as benefits. First and foremost, they had to maintain a growing number of servers. IaC tools, however, were still a long way off.

After that, a few large machines began to be replaced by many smaller ones, and the infrastructure footprint of an average engineering organization grew and became more cyclical. Ops had to support more and more things. To cope with peak load, infrastructure had to be scaled up or down at different times of the day.

To stay efficient, many instances had to be started in the morning to reach maximum capacity, and many had to be shut down at night to reduce it. The whole process had to be managed manually, which became a challenge over time.

All of this led to the creation and adoption of infrastructure as code tools, which made it possible to systematize the tasks listed above as far as possible. IaC made managing data centers and servers far more efficient by relying on machine-readable files. These became an alternative to configuring physical equipment and tools under human supervision.

AWS CloudFormation, released by Amazon Web Services in 2011, was one of the first tools of this kind. It became one of the best tools for DevOps, allowing engineers to version infrastructure as quickly as ordinary code. That in turn makes it possible to track the resulting versions of the infrastructure and keep environments consistent.

Well-Known Infrastructure as Code Tools

The top IaC tools that have recently become popular among developers are:

- Terraform

- AWS CloudFormation

- Azure Resource Manager

- Ansible

- Chef

- Puppet

- Pulumi

- SaltStack

- Google Cloud Deployment Manager

- Vagrant

- Crossplane

Now let us compare these infrastructure as code tools to see how they resemble or differ from one another in application area, delivery method, approach, and language.

| Tool | Method | Approach | Language | Applied for |
| --- | --- | --- | --- | --- |
| Terraform | Push | Declarative | HashiCorp Configuration Language (HCL) | Web and cloud formation services |
| AWS CloudFormation | n/d | Declarative | YAML or JSON | Amazon Web Services |
| Azure Resource Manager | n/d | Declarative | Azure | Role-based access control |
| Google Cloud Deployment Manager | n/d | Declarative | YAML or Python | Google Cloud resources and platforms |
| Pulumi | Push | Declarative | TypeScript, Python, or Go | Azure Cloud services, AWS, GCP |
| Ansible | n/d | n/d | YAML | Users of modules and plugins |
| Chef | Pull | Declarative and imperative | Ruby | Cloud providers and web services |
| Puppet | Pull | Declarative | Ruby | Cloud platforms and web services |
| Crossplane | n/d | Declarative | YAML | Almost all cloud providers present on the market, architecture and cloud field |
| Vagrant | n/d | Declarative | Ruby, PHP, C#, Python, Java, JavaScript | Engineers preferring a few virtual PCs to big cloud-based infrastructures |
| SaltStack | Push/Pull | Declarative and imperative | Python | Universal tool, fits any platform |

Main Tasks of Infrastructure as Code Tools

Today it is hard to imagine major providers and services operating without cloud automation tools. A wide range of IaC tools helps IT engineers with tasks such as:

- Deployment

- Instrumentation

- Configuration

- Provisioning

Previously, IT specialists installed, configured, and updated software on cloud servers manually. Team members stored and configured data the same way. This took a lot of time, required bringing in additional developers, and significantly increased costs.

Infrastructure as code gave professionals a way to address both problems: the additional salary costs and the difficulty of scaling.

Note that some IaC tools are built into the infrastructure platform itself, while others manage applications and infrastructure within an environment from the outside.

Below are a few words about infrastructure as code on AWS and its advantages.

Advantages of AWS Infrastructure as Code

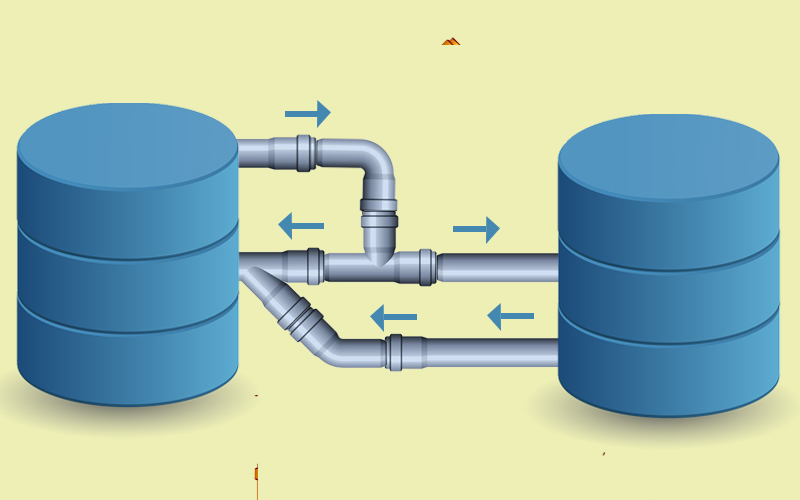

IaC aims to provision and manage cloud resources using a human-readable template that machines can also consume easily. AWS CloudFormation is considered a reliable DevOps solution that implements IaC for Amazon Web Services.

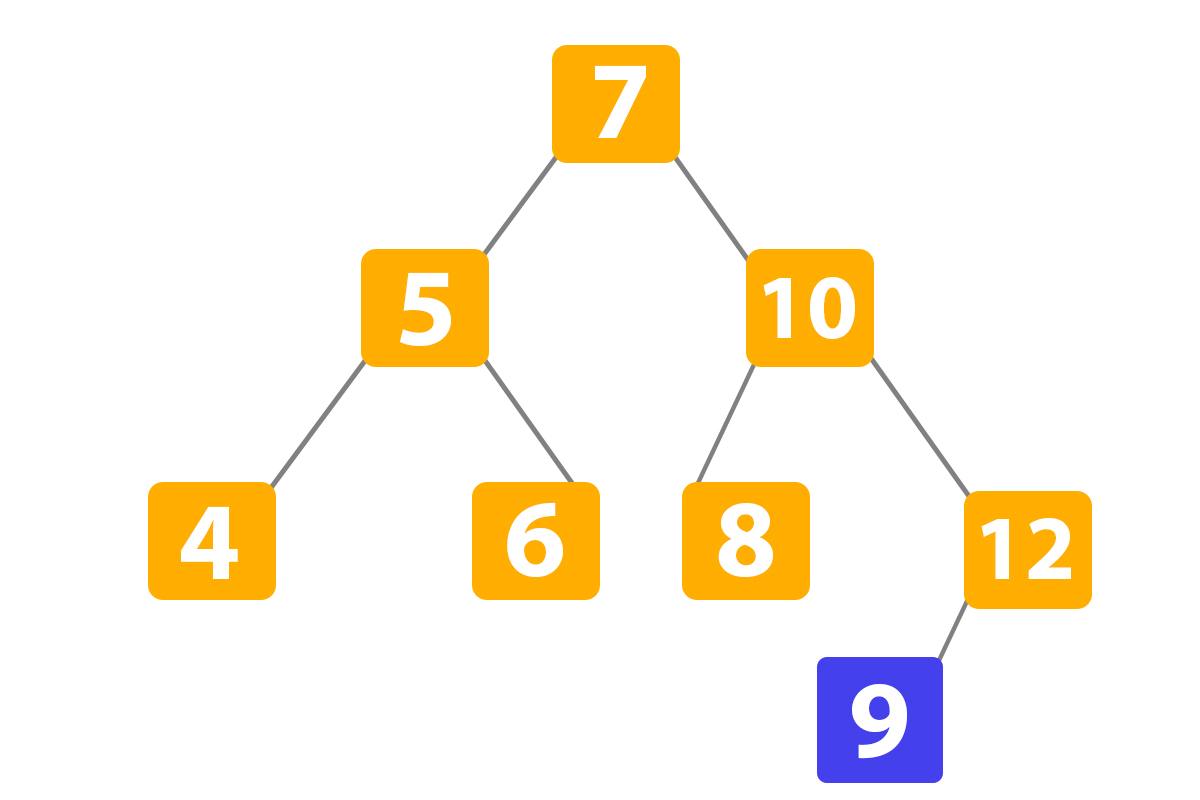

AWS CloudFormation creates resources in a user's Amazon Web Services account from a description supplied by that user, and applies the description on request. A typical infrastructure as code example is a template fragment, written in YAML, that describes the creation of an Amazon Elastic Container Service (ECS) service.

In such a template we set the Type to AWS::ECS::Service, declare the service discovery resource as a dependency (DependsOn), and in the properties set “App” as the service name, “Production” as the cluster, a maximum of 200 percent and a minimum of 75 percent in the deployment configuration, and 5 as the desired count.
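The fragment described above can be sketched as follows. This is only an approximation under stated assumptions: it builds the template as a Python dictionary and prints it as JSON (which CloudFormation accepts alongside YAML), and the logical IDs `AppService` and `ServiceDiscovery` are hypothetical names chosen for illustration. The resource that `DependsOn` points to and other properties a real service would need are not shown, so the fragment would not deploy as written.

```python
import json

# Logical IDs "AppService" and "ServiceDiscovery" are hypothetical names.
template = {
    "Resources": {
        "AppService": {
            "Type": "AWS::ECS::Service",
            "DependsOn": "ServiceDiscovery",
            "Properties": {
                "ServiceName": "App",
                "Cluster": "Production",
                "DeploymentConfiguration": {
                    "MaximumPercent": 200,
                    "MinimumHealthyPercent": 75,
                },
                "DesiredCount": 5,
            },
        }
    }
}

# Print the fragment as JSON so it can be read or stored in version control.
print(json.dumps(template, indent=2))
```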

CloudFormation then takes the template and becomes responsible for creating, updating, and removing resources in the user's AWS account according to the template's content. If the user adds a new resource to the template, CloudFormation creates that resource in the account. If the user changes a resource, CloudFormation updates or replaces the existing one. If the user deletes a resource from the template, it is removed from the account.
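The create, update, and delete decisions described above amount to comparing what is deployed with what the template says. The sketch below shows that comparison on plain dictionaries. It is not how CloudFormation is implemented; `plan_changes` and the resource names are hypothetical and serve only to illustrate the idea.

```python
def plan_changes(deployed: dict, desired: dict) -> dict:
    """Compare deployed resources with the template and list what to do."""
    return {
        # In the template but not yet deployed
        "create": sorted(desired.keys() - deployed.keys()),
        # Present in both, but with different settings
        "update": sorted(
            name
            for name in desired.keys() & deployed.keys()
            if desired[name] != deployed[name]
        ),
        # Deployed but no longer in the template
        "delete": sorted(deployed.keys() - desired.keys()),
    }


deployed = {
    "AppService": {"DesiredCount": 5},
    "OldQueue": {"Retention": 60},
}
desired = {
    "AppService": {"DesiredCount": 8},
    "Cache": {"Nodes": 2},
}

print(plan_changes(deployed, desired))
# {'create': ['Cache'], 'update': ['AppService'], 'delete': ['OldQueue']}
```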

IaC tools offer users many advantages:

They are visible:

An IaC template serves as a precise reference for which resources you have in your account and how they are configured. There is no need to go to the web console to check settings.

They are scalable:

You can write infrastructure as code once and then reuse it many times. A single good-quality template can serve as the basis for different services in various regions of the world, which significantly simplifies horizontal scaling.

They are stable:

If you change the wrong parameter or remove the wrong resource in the web console, you can break everything. IaC tools solve this problem, especially in combination with version control such as Git.

They are transactional:

CloudFormation not only creates resources in your AWS account but also waits for them to stabilize as they start. It checks for successful initialization and, if anything fails, can gracefully roll the infrastructure back to the last known good state.

They are secure:

Once again, IaC gives you a single template for deploying your architecture. As soon as your secure architecture has been created, you can reuse it many times, knowing that every deployed copy has the same settings.
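The transactional behavior described above (either every change succeeds or the infrastructure returns to its last known good state) can be illustrated with a small sketch. It works on an in-memory dictionary rather than real cloud resources, and `apply_with_rollback`, `add_cache`, and `broken_change` are hypothetical names, not part of any AWS API.

```python
import copy


def apply_with_rollback(state: dict, changes: list) -> dict:
    """Apply every change or none: on any failure, return the last good state."""
    last_good = copy.deepcopy(state)
    working = copy.deepcopy(state)
    try:
        for change in changes:
            change(working)  # each change mutates the working copy
    except Exception as error:
        print(f"Change failed ({error}); rolling back")
        return last_good
    return working


def add_cache(state: dict) -> None:
    state["Cache"] = {"Nodes": 2}


def broken_change(state: dict) -> None:
    raise RuntimeError("resource did not stabilize")


state = {"AppService": {"DesiredCount": 5}}
print(apply_with_rollback(state, [add_cache]))
print(apply_with_rollback(state, [add_cache, broken_change]))
```

The first call returns the state with the new resource added. The second fails halfway through, so the partially applied `Cache` resource is discarded and the original state is returned.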

Conclusions

IaC tools are a very popular new generation of instruments, introduced at the beginning of this century, that make it easier to form cloud services and to deploy and adjust infrastructure using just code. There is no need for manual configuration, which significantly simplifies developers' work and solves the scalability problem as well. Terraform and its closest analog, Pulumi, are considered the most common tools for this purpose.

FAQs

What problems do the infrastructure as code tools solve?

IaC tools were developed to eliminate inconveniences such as the manual configuration of cloud software and services. Engineers who did not have IaC maintained the settings of each deployment environment separately. Over time, each environment became a unique configuration that could not be reproduced automatically. Professionals call such an environment a “snowflake”.

When environments do not match one another, deployment problems follow. Administering and supporting infrastructure by hand leads to errors that are difficult to track. IaC tools remove the need for manual configuration and keep environments consistent by defining their desired state in well-written code.

Why is Terraform always number one among the IaC tools?

Terraform is considered the best tool for DevOps and the most in demand on the market. It is an open-source IaC solution that is very flexible and supports the most promising and secure cloud services, such as Azure, GCP, and AWS.

It can also work with many different cloud providers and manage them within a single workflow, and it can destroy resources while the configuration that defines them is preserved.

Terraform is also considered a very cost-effective infrastructure as code instrument, since it is open-source by nature and comes with a wide range of quality tools and scripts.

What is the best alternative to Terraform?

Many IaC tools commonly used on cloud platforms resemble Terraform in their approach, usage, and description methods. Among them, Pulumi is worth highlighting. Many specialists state that it has the same ability to create, manage, and deploy infrastructure on any cloud. Like Terraform, it is free and open-source.
