.NET 7 Has Already Been Released! What’s New?

What’s new in .NET 7

To give a brief introduction, .NET is Microsoft’s framework for creating and developing a wide range of apps. Its key characteristics are that it is cross-platform, open source, and free.

With the .NET platform you can create everything from desktop programs (for operating systems such as Windows, macOS, or Linux) to mobile applications (for Android or iOS), web apps, IoT software, and even games (using the Unity engine, which is based on C#).

The latest version of .NET, generally known as dotnet 7, was released by Microsoft in November 2022.

.NET 7 Enhancements – What’s New in .NET 7?

Faster and lighter applications (Native AOT)

Native AOT (ahead-of-time compilation) is one of the new features and improvements introduced by Microsoft in .NET 7. Native AOT lived as an experimental project for some time, and Microsoft has now moved it into mainline development along with a couple of updates, as many of us have been asking for a long time.

For those who are unfamiliar with Native AOT, Ahead-of-time (simply AOT) generates code at compile-time rather than run-time.

Microsoft already provides ReadyToRun, also known as R2R (for client and server applications), and Mono AOT (for mobile and WASM applications) for this purpose. Microsoft also emphasizes that Native AOT does not replace Mono AOT or WASM.

As its name suggests, Native AOT generates code at compile time, but as native code. Its main advantage, according to Microsoft, is improved performance, particularly in:

- Startup time

- Memory consumption

- Size on disk

- Access to restricted platforms (where no JIT is allowed)

How does Native AOT work

Microsoft’s explanation: “Applications begin to run as soon as the operating system pages them into memory. The data structures are designed to run AOT-generated code rather than compile new code at runtime. This is how languages such as Go, Swift, and Rust compile. Native AOT works best in environments where startup time is critical.”

Observability

Microsoft also improves support for the cloud-native observability specification, OpenTelemetry. Although this work is still in development in .NET 7, samplers are now allowed to modify the tracestate. Here’s an example from Microsoft:

    // ActivityListener Sampling callback
    listener.Sample = (ref ActivityCreationOptions<ActivityContext> activityOptions) =>
    {
        activityOptions = activityOptions with { TraceState = "rojo=00f067aa0ba902b7" };
        return ActivitySamplingResult.AllDataAndRecorded;
    };
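To see where that callback fits, here is a minimal sketch of a complete console program that registers a listener and starts an activity. The source name "Demo.Source" and the activity name "work" are hypothetical names chosen for this illustration; the rest uses the System.Diagnostics types shown above.

```csharp
using System;
using System.Diagnostics;

// "Demo.Source" and "work" are hypothetical names used only in this sketch.
var source = new ActivitySource("Demo.Source");

using var listener = new ActivityListener
{
    // Only listen to the source created above.
    ShouldListenTo = s => s.Name == "Demo.Source",

    // The sampling callback may replace the options, including TraceState (.NET 7).
    Sample = (ref ActivityCreationOptions<ActivityContext> options) =>
    {
        options = options with { TraceState = "rojo=00f067aa0ba902b7" };
        return ActivitySamplingResult.AllDataAndRecorded;
    }
};
ActivitySource.AddActivityListener(listener);

// With a listener that samples the activity, StartActivity returns a non-null Activity.
using Activity? activity = source.StartActivity("work");
Console.WriteLine(activity?.TraceStateString ?? "(no activity was created)");
```

Without a registered listener, StartActivity returns null, which is why the sketch keeps the nullable type.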

Shortened startup time (Write-Xor-Execute)

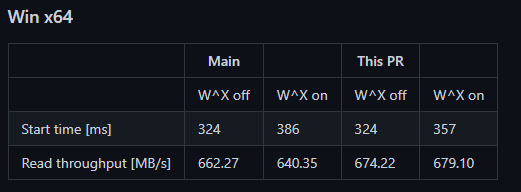

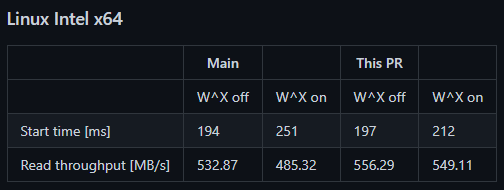

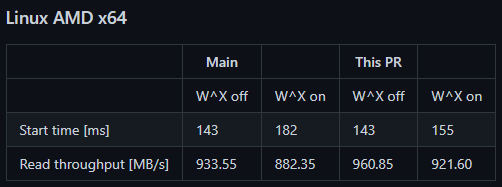

As we saw at the outset, Microsoft has decided to prioritize performance enhancements, and this is no exception. With Reimplement stubs to improve performance, we have seen an improvement in startup time of up to 10-15%, according to Microsoft.

This is primarily due to a significant decrease in the number of modifications made after code creation at runtime.

Let’s take a look on the benchmarks:

Containers and Cloud Native

The Modern Cloud is another new feature described by Microsoft. As previously stated, cloud-native applications are typically built from the ground up to take advantage of all the resources of containers and databases. This architecture is excellent because it enables easy scaling of applications through the use of autonomous subsystems. This, in turn, lowers long-term costs.

What .NET will bring is the ease of creating cloud-native applications, as well as various improvements to the developer experience — thank you, Microsoft, for thinking of us.

Furthermore, it will greatly simplify the installation and configuration of authentication and authorization systems. And, as always, minor performance enhancements at application runtime.

It is easier to upgrade .NET applications

Migrating older applications to .NET 6 has not been the easiest thing in the world, as we all know, and some have suffered through it. That is why Microsoft is releasing new upgrade tooling for older applications. The main points are as follows:

- More code analyzers

- More code checkers

- Compatibility checkers

All of this will be accompanied by the .NET Upgrade Assistant, which will greatly assist in upgrading those applications while saving the developer time — thank you, Microsoft.

Hot Reload has been improved

My favorite .NET 6 feature is updated in .NET 7. C# Hot Reload is now available in Blazor WebAssembly and in .NET for iOS and Android.

Microsoft has also added support for new kinds of edits, such as:

- Adding static lambdas to existing methods

- Adding lambdas that capture this to existing methods that already have at least one lambda that captures this

- Adding new static or non-virtual instance methods to existing classes

- Adding new static fields to existing classes

- Adding new classes

API updates and enhancements

System.Text.Json contains a few minor quality-of-life enhancements:

- A JsonSerializerOptions.Default property has been added.

- A JsonWriterOptions.MaxDepth property has been added, and its value is derived from the corresponding JsonSerializerOptions.MaxDepth value.

- Patch methods have been added to System.Net.Http.Json.

This was not possible in previous versions, but with the additions to System.Text.Json, serialization and deserialization of polymorphic type hierarchies is now possible.

Let’s look at the Microsoft example:

    [JsonDerivedType(typeof(Derived))]
    public class Base
    {
        public int X { get; set; }
    }

    public class Derived : Base
    {
        public int Y { get; set; }
    }
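As a rough illustration of what the attribute changes, the sketch below serializes a Derived instance through a variable typed as Base. The property values are arbitrary; the types are the ones from the Microsoft example, repeated so that the program is self-contained.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// The variable is typed as Base, but it holds a Derived instance.
Base value = new Derived { X = 1, Y = 2 };

// Because Base declares Derived via [JsonDerivedType], the serializer
// also writes the members that only exist on Derived (here: Y).
string json = JsonSerializer.Serialize<Base>(value);
Console.WriteLine(json);

[JsonDerivedType(typeof(Derived))]
public class Base
{
    public int X { get; set; }
}

public class Derived : Base
{
    public int Y { get; set; }
}
```

Note that this form registers no type discriminator, so it only affects serialization. To deserialize back into Derived, the attribute also needs a discriminator argument, for example [JsonDerivedType(typeof(Derived), "derived")].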

Activity.Current New Standard

In .NET 6, the most common way to track span context across the different threads being managed is to use AsyncLocal<T>.

Jeremy Likness stated in his post Announcing .NET 7 Preview 4:

“…with Activity becoming the standard to represent spans, as used by OpenTelemetry, it is impossible to set the value changed handler since the context is tracked via Activity.Current.”

We can now subscribe to Activity.CurrentChanged to receive these notifications. Consider the following Microsoft example:

    public partial class Activity : IDisposable
    {
        public static event EventHandler<ActivityChangedEventArgs>? CurrentChanged;
    }
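The declaration above only shows the shape of the event. A minimal sketch of subscribing to it could look like the following; the operation name "demo-operation" and the counter variable are hypothetical and exist only for this illustration.

```csharp
using System;
using System.Diagnostics;

int changes = 0;

// The handler receives the previous and the new value of Activity.Current.
Activity.CurrentChanged += (sender, e) =>
{
    changes++;
    string previous = e.Previous?.OperationName ?? "none";
    string current = e.Current?.OperationName ?? "none";
    Console.WriteLine($"Activity.Current changed: {previous} -> {current}");
};

var activity = new Activity("demo-operation"); // hypothetical operation name
activity.Start(); // makes this activity the current one
activity.Stop();  // restores the parent (none in this sketch)

Console.WriteLine($"Notifications received: {changes}");
```

The event is static, so a handler like this one observes every change of Activity.Current in the process; a real application would normally unsubscribe when it is done.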

Microseconds and nanoseconds in date/time structures

Until now, the “tick” was the smallest time increment that could be used, and its value is 100 ns. The issue was that, to obtain a value in microseconds or nanoseconds, you had to calculate everything from the tick count yourself, which was not the most convenient thing in the world.

With .NET 7, Microsoft adds microsecond and nanosecond values to the various date and time structures that exist.

Consider the following Microsoft example:

.NET 7 DateTime

    namespace System {
        public struct DateTime {
            public DateTime(int year, int month, int day, int hour, int minute, int second, int millisecond, int microsecond);
            public DateTime(int year, int month, int day, int hour, int minute, int second, int millisecond, int microsecond, System.DateTimeKind kind);
            public DateTime(int year, int month, int day, int hour, int minute, int second, int millisecond, int microsecond, System.Globalization.Calendar calendar);
            public int Microsecond { get; }
            public int Nanosecond { get; }
            public DateTime AddMicroseconds(double value);
        }
    }

.NET 7 TimeOnly

    namespace System {
        public struct TimeOnly {
            public TimeOnly(int hour, int minute, int second, int millisecond, int microsecond);
            public int Microsecond { get; }
            public int Nanosecond { get; }
        }
    }
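To make the difference concrete, here is a small sketch that reads the microsecond component both ways: with the tick arithmetic that was needed before, and with the new members listed above. The date and time values are arbitrary.

```csharp
using System;

// 12:30:15 plus 250 milliseconds and 500 microseconds (arbitrary sample values).
var dt = new DateTime(2022, 11, 8, 12, 30, 15, 250, 500);

// Before .NET 7: derive the microseconds from ticks (1 tick = 100 ns, 10 ticks = 1 microsecond).
long microsFromTicks = dt.Ticks % TimeSpan.TicksPerMillisecond / 10;

// .NET 7: read the components directly.
int micros = dt.Microsecond;

// Nanosecond has 100 ns resolution because it is still backed by ticks.
int nanos = dt.AddTicks(7).Nanosecond;

// The new Add method and the new TimeOnly constructor.
DateTime later = dt.AddMicroseconds(250);
var time = new TimeOnly(12, 30, 15, 250, 500);

Console.WriteLine($"{microsFromTicks} {micros} {nanos} {later.Microsecond} {time.Microsecond}");
```

The underlying resolution does not change: values are still stored as 100 ns ticks, so Nanosecond can only ever be a multiple of 100.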

A single memory cache

With the AddMemoryCache API, you can now create a single memory cache and have it injected so that you can call GetCurrentStatistics. Consider the following Microsoft example:

    // when using `services.AddMemoryCache(options => options.TrackStatistics = true);` to instantiate
    [EventSource(Name = "Microsoft-Extensions-Caching-Memory")]
    internal sealed class CachingEventSource : EventSource
    {
        // Fields added so that the sample compiles.
        private readonly IMemoryCache _memoryCache;
        private PollingCounter? _cacheHitsCounter;

        public CachingEventSource(IMemoryCache memoryCache) { _memoryCache = memoryCache; }

        protected override void OnEventCommand(EventCommandEventArgs command)
        {
            if (command.Command == EventCommand.Enable)
            {
                if (_cacheHitsCounter == null)
                {
                    // GetCurrentStatistics() returns null when statistics are not tracked.
                    // TotalHits is the property name in the released MemoryCacheStatistics type.
                    _cacheHitsCounter = new PollingCounter("cache-hits", this, () =>
                        _memoryCache.GetCurrentStatistics()?.TotalHits ?? 0)
                    {
                        DisplayName = "Cache hits",
                    };
                }
            }
        }
    }

New Tar APIs

We now have cross-platform APIs for extracting and modifying (reading and writing) tar archives. As is customary, Microsoft has provided examples, so here are a few:

.NET 7 Archive Tar API

    // Generates a tar archive where all the entry names are prefixed by the root directory 'SourceDirectory'
    TarFile.CreateFromDirectory(sourceDirectoryName: "/home/dotnet/SourceDirectory/", destinationFileName: "/home/dotnet/destination.tar", includeBaseDirectory: true);

.NET 7 Extract Tar API

    // Extracts the contents of a tar archive into the specified directory, but avoids overwriting anything found inside
    TarFile.ExtractToDirectory(sourceFileName: "/home/dotnet/destination.tar", destinationDirectoryName: "/home/dotnet/DestinationDirectory/", overwriteFiles: false);
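The two calls can be combined into a round trip. The following is a minimal sketch that works in a temporary folder instead of the /home/dotnet paths used above; the folder layout and the hello.txt file are hypothetical and exist only for this illustration.

```csharp
using System;
using System.Formats.Tar;
using System.IO;

// Work inside a throwaway folder under the system temp directory.
string root = Path.Combine(Path.GetTempPath(), "tar-demo-" + Guid.NewGuid().ToString("N"));
string sourceDir = Path.Combine(root, "SourceDirectory");
string extractDir = Path.Combine(root, "DestinationDirectory");
string tarPath = Path.Combine(root, "destination.tar");

Directory.CreateDirectory(sourceDir);
Directory.CreateDirectory(extractDir); // created up front so the extraction target exists
File.WriteAllText(Path.Combine(sourceDir, "hello.txt"), "hello tar");

// Archive the folder; entry names are prefixed with "SourceDirectory".
TarFile.CreateFromDirectory(sourceDir, tarPath, includeBaseDirectory: true);

// Extract it again without overwriting anything that already exists.
TarFile.ExtractToDirectory(tarPath, extractDir, overwriteFiles: false);

string extractedFile = Path.Combine(extractDir, "SourceDirectory", "hello.txt");
string content = File.ReadAllText(extractedFile);
Console.WriteLine(content);

// Clean up the temporary folder.
Directory.Delete(root, recursive: true);
```

Because includeBaseDirectory is true, the extracted file ends up one level deeper, inside a SourceDirectory folder under the destination.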

Code fixer

Of course, you can’t have an analyzer without a code fixer. Microsoft tells us that the code fixer has two functions (for the time being; let’s keep waiting for more information). The first is suggesting a RegexGenerator source generator method, with the option of overriding the name that comes by default.

The second function of this .NET 7 code fixer is replacing the original code with a call to the new method.

On Stack Replacement (OSR)

OSR (On Stack Replacement) is an excellent addition to tiered compilation. It allows the runtime to swap the code of a method for a more optimized version while that method is still executing.

Microsoft claims that:

“OSR allows long-running methods to switch to more optimized versions in the middle of their execution, allowing the runtime to jit all methods quickly at first and then transition to more optimized versions when those methods are called frequently (via tiered compilation) or have long-running loops (via OSR).”

With OSR, startup time improves by up to 25% (Avalonia ILSpy test), and according to the TechEmpower benchmarks, improvements range from 10% to 30%.

Improved Regex source generator

With the new Regex source generator, you can cut the time spent optimizing patterns in the compiled engine by up to 5 times without sacrificing any of the associated performance benefits.

Furthermore, because the generated code lives in a partial class, there is no run-time compilation overhead when the pattern is known at compile time. If your pattern is known at compile time, this generator is recommended instead of the traditional RegexOptions.Compiled approach.

All you have to do is declare a partial method that returns a Regex and annotate it with an attribute called RegexGenerator (renamed to GeneratedRegex in the final .NET 7 release), passing the pattern and any options (such as case insensitivity). The generator implements that method and regenerates it whenever the pattern string or the options change.

Consider the following before-and-after comparison based on the Microsoft example:

.NET 6 Regex

    using System.Text.RegularExpressions;

    public class Foo
    {
        public Regex regex = new Regex(@"abc|def", RegexOptions.IgnoreCase);

        public bool Bar(string input)
        {
            bool isMatch = regex.IsMatch(input);
            // ..
            return isMatch; // added so that the method compiles
        }
    }

.NET 7 Regex source generator

    using System.Text.RegularExpressions;

    public partial class Foo // <-- Make the class a partial class
    {
        // <-- Add the attribute and pass in your pattern and options.
        // It was called RegexGenerator in the .NET 7 previews and is GeneratedRegex in the final release.
        [GeneratedRegex(@"abc|def", RegexOptions.IgnoreCase)]
        public static partial Regex MyRegex(); // <-- Declare the partial method, which will be implemented by the source generator

        public bool Bar(string input)
        {
            bool isMatch = MyRegex().IsMatch(input); // <-- Use the generated engine by invoking the partial method.
            // ..
            return isMatch; // added so that the method compiles
        }
    }

Improvements made to the SDK

Using dotnet new will be much easier for those who work with .NET. With major updates like improved intuitiveness and faster tab completion, it’s difficult to find anything negative about this change.

Added new command names

The command lines in general are changing. Specifically, the commands shown in this output no longer include the -- prefix, as they did before. This was done to match what a user would expect from a subcommand in a command-line app.

The old versions of these commands (--install, etc.) are still available in case removing them would break scripts, but the hope is that one day they will emit deprecation warnings so you can transition over without risk.

Tab key completion

The dotnet command line interface has long supported tab completion on shells such as PowerShell, bash, zsh, and fish. It is up to each command to decide what it wants to display for a given input.

In .NET 7, the dotnet new command now supports tab completion for a wide range of inputs:

    dotnet new angular
    angular       grpc         razorclasslib   web                 wpf                  /?
    blazorserver  mstest       razorcomponent  webapi              wpfcustomcontrollib  /h
    blazorwasm    mvc          react           webapp              wpflib               install
    classlib      nugetconfig  reactredux      webconfig           wpfusercontrollib    list
    console       nunit        sln             winforms            xunit                search
    editorconfig  nunit-test   tool-manifest   winformscontrollib  --help               uninstall
    gitignore     page         viewimports     winformslib         -?                   update
    globaljson    razor        viewstart       worker              -h

If you want to know how to enable it, I recommend you read the Microsoft guide. Similarly, if you want to learn about all of the features available, I recommend returning to the original source.

Dynamic PGO improvements

Microsoft recently unveiled a new advancement in program optimization. Dynamic PGO is intended to make some significant changes to the Static PGO that we are already familiar with. Whereas Static PGO requires developers to use special tools and a separate training run, Dynamic PGO does not; all you need to do is run the application you want to optimize, and the runtime collects the profile data itself.

According to Andy Ayers on GitHub, the following has been added:

“Extend local morph ref counting so that we can determine single-definition, single-use locals.”

“Add a phase that runs immediately after local morph and attempts to forward single-def single-use local defs to uses when they are in adjacent statements.”

Better System.Reflection performance

Continuing with the performance enhancements in .NET 7, we have the improvement of the System.Reflection namespace. This namespace contains the types that retrieve information about assemblies, modules, members, and other elements by inspecting their metadata.

These types can also be used to manipulate instances of loaded types, and they make it simple to create types dynamically.

Microsoft’s update has significantly reduced the overhead of invoking a member using reflection in .NET 7. In terms of numbers, the most recent Microsoft benchmark (created with the BenchmarkDotNet package) shows performance up to 3-4x faster.

    using BenchmarkDotNet.Attributes;
    using BenchmarkDotNet.Running;
    using System.Reflection;

    namespace ReflectionBenchmarks
    {
        internal class Program
        {
            static void Main(string[] args)
            {
                BenchmarkRunner.Run<InvokeTest>();
            }
        }

        public class InvokeTest
        {
            private MethodInfo? _method;
            private object[] _args = new object[1] { 42 };

            [GlobalSetup]
            public void Setup()
            {
                _method = typeof(InvokeTest).GetMethod(nameof(InvokeMe), BindingFlags.Public | BindingFlags.Static)!;
            }

            [Benchmark]
            // *** This went from ~116ns to ~39ns or 3x (66%) faster.***
            public void InvokeSimpleMethod() => _method!.Invoke(obj: null, new object[] { 42 });

            [Benchmark]
            // *** This went from ~106ns to ~26ns or 4x (75%) faster. ***
            public void InvokeSimpleMethodWithCachedArgs() => _method!.Invoke(obj: null, _args);

            public static int InvokeMe(int i) => i;
        }
    }
