C# String Concatenation: All the Common Ways

What is String Concatenation in C#?

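String concatenation is the process of appending one string to the end of another. It is one of the most frequent operations in C# and .NET, and there are several ways to do it. Strings in .NET are immutable, so every concatenation produces a new string object rather than modifying an existing one. String literals and constants are concatenated at compile time, not at run time. String variables are concatenated only at run time.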
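The sketch below shows the difference between compile-time and run-time concatenation. It concatenates two constants and two variables that have the same contents. String literals are interned, and that includes constants the compiler has already folded. As a result, the folded constant refers to the same object as the literal “ConCat”, while the run-time result is a separate object. The variable names are illustrative:

```csharp
using System;

// Constants: the compiler folds Prefix + Suffix into the single literal "ConCat".
const string Prefix = "Con";
const string Suffix = "Cat";
const string Folded = Prefix + Suffix; // allowed in a const only because the result is a compile-time constant

// Variables: the concatenation happens when this line executes.
string prefix = "Con";
string suffix = "Cat";
string runtime = prefix + suffix;

// The folded constant is an interned literal, so it is the same object as "ConCat".
Console.WriteLine(object.ReferenceEquals(Folded, "ConCat"));  // True
// The run-time result has equal contents...
Console.WriteLine(runtime == "ConCat");                        // True
// ...but it is a newly allocated string object.
Console.WriteLine(object.ReferenceEquals(runtime, "ConCat")); // False
```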

How to Concatenate Two Strings in C#

There are several ways to concatenate strings, and the right one depends on the use case. If you are building a string from many pieces, for example inside a loop, use the StringBuilder class. It appends to an internal buffer instead of creating a new string object at every step. If you are combining only a few strings, the + operator, string.Concat, string.Format, or string interpolation is usually sufficient.

Using the Concatenation Operator (+)

Here’s a code example with the plus sign:

```csharp
string str1 = "Con";
string str2 = "Cat";
string concat = str1 + str2;
Console.WriteLine(concat); // Output: ConCat
concat += "enation";       // append to the current value and assign the result back
Console.WriteLine(concat); // Output: ConCatenation
```

The code declares two strings, str1 and str2, with the values “Con” and “Cat”. The + operator joins them into a new string, “ConCat”, which is passed to Console.WriteLine and printed to the console. The += operator then appends “enation” and assigns the result back to concat, so the second call prints “ConCatenation”. Behind the scenes, the C# compiler translates string + and += into calls to string.Concat.
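The + operator also accepts a non-string operand, as long as the other operand is a string. The C# specification treats a null operand as an empty string and converts any other value with its ToString method. Evaluation runs left to right, which can be surprising when numbers come first. Here is a minimal sketch with illustrative variable names:

```csharp
#nullable enable
using System;

int count = 3;
string label = "Items: " + count;   // count.ToString() is appended -> "Items: 3"

string? missing = null;
string safe = "Value: " + missing;  // null is treated as "" -> "Value: "

string sumFirst = 1 + 2 + " cats";  // 1 + 2 is integer addition (3), then "3 cats"
string textFirst = "cats " + 1 + 2; // "cats 1" first, then "cats 12"

Console.WriteLine(label);
Console.WriteLine(safe);
Console.WriteLine(sumFirst);
Console.WriteLine(textFirst);
```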

C# Concat Method

```csharp
string str1 = "Con";
string str2 = "Cat";
string concat = string.Concat(str1, str2);
Console.WriteLine(concat); // Output: ConCat
```

The code again declares str1 and str2 with the values “Con” and “Cat”. The string.Concat method joins them into a new string, “ConCat”, which is passed to Console.WriteLine and printed to the console.

The string.Concat method does not insert any separators or spaces between the strings, and it does not change their case. The result is therefore “ConCat”, with both capital “C” characters kept exactly as they appear in the source strings.
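string.Concat is not limited to two arguments. It has overloads for three and four strings, for a string array, and for any IEnumerable<string>. These are convenient when the pieces are already in a collection. Here is a short sketch of a few of these overloads:

```csharp
using System;
using System.Collections.Generic;

// Three separate arguments.
string three = string.Concat("Con", "Cat", "enation");

// An array of strings.
string[] parts = { "Con", "Cat", "enation" };
string fromArray = string.Concat(parts);

// Any IEnumerable<string>, such as a List<string>.
List<string> list = new List<string> { "Con", "Cat" };
string fromList = string.Concat(list);

Console.WriteLine(three);     // Output: ConCatenation
Console.WriteLine(fromArray); // Output: ConCatenation
Console.WriteLine(fromList);  // Output: ConCat
```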

To combine two char arrays in C#, you can use the Concat method from the System.Linq namespace. Here’s an example:

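```csharp
using System;
using System.Linq; // provides the Enumerable.Concat and ToArray extension methods

char[] arr1 = { 'c', 'o', 'n' };
char[] arr2 = { 'c', 'a', 't' };
char[] result = arr1.Concat(arr2).ToArray();
Console.WriteLine(result); // Output: concat
```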

First, we define two char arrays, arr1 and arr2. The Concat method returns an IEnumerable<char> that yields the elements of arr1 followed by the elements of arr2. The ToArray method then copies that sequence into a new char[].

Finally, the combined array is passed to Console.WriteLine. Console.WriteLine has an overload that accepts a char[] and writes its characters one after another.

No separator is added between the two arrays, so the output is “concat”, with no space between the characters ‘n’ and ‘c’.
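If you need a string rather than a char[], or want to avoid LINQ, you can use a different technique. Allocate the destination array once, copy both arrays into it with Array.Copy, and pass the result to the string(char[]) constructor. Here is a minimal sketch, assuming the same two arrays:

```csharp
using System;

char[] arr1 = { 'c', 'o', 'n' };
char[] arr2 = { 'c', 'a', 't' };

// Allocate the destination once, sized to hold both arrays.
char[] combined = new char[arr1.Length + arr2.Length];

// Copy arr1 to the start, then arr2 right after it.
Array.Copy(arr1, 0, combined, 0, arr1.Length);
Array.Copy(arr2, 0, combined, arr1.Length, arr2.Length);

// The string(char[]) constructor builds a real string from the characters.
string word = new string(combined);
Console.WriteLine(word); // Output: concat
```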

C# Join Method

```csharp
string[] words = { "Con", "Cat" };
string concat = string.Join(" ", words);
Console.WriteLine(concat); // Output: Con Cat
```

string.Join inserts the separator passed as its first argument (here, a single space) between consecutive elements of the array. It does not add the separator before the first element or after the last. Joining “Con” and “Cat” therefore produces “Con Cat”, which is printed to the console.
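The separator can be any string, including an empty one. string.Join also accepts any IEnumerable<T> and formats each element with ToString. Here is a brief sketch with illustrative variable names:

```csharp
using System;
using System.Collections.Generic;

string[] words = { "Con", "Cat" };

string commaSeparated = string.Join(", ", words); // "Con, Cat"
string noSeparator = string.Join("", words);      // "ConCat", same as string.Concat(words)

// Non-string elements are converted with ToString().
List<int> numbers = new List<int> { 1, 2, 3 };
string expression = string.Join(" + ", numbers);  // "1 + 2 + 3"

Console.WriteLine(commaSeparated);
Console.WriteLine(noSeparator);
Console.WriteLine(expression);
```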

C# Format Method

```csharp
string str1 = "Con";
string str2 = "Cat";
string concat = string.Format("{0}{1}", str1, str2);
Console.WriteLine(concat); // Output: ConCat
```

In this code, two string variables, str1 and str2, are initialized with the values “Con” and “Cat”. The string.Format method takes a format string followed by zero or more arguments and returns a new string. In the format string “{0}{1}”, each number in braces is the zero-based index of an argument. {0} is replaced by the first argument and {1} by the second.

The first argument is str1 and the second is str2, so {0} becomes “Con” and {1} becomes “Cat”. The resulting string is “ConCat”, which is then printed to the console using Console.WriteLine.
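A format item can do more than insert an argument. The same index may appear several times and in any order. A format string after a colon controls how a value is rendered, and doubled braces produce literal braces. The sketch below illustrates all three. The price value is only an example, and CultureInfo.InvariantCulture is passed so that the number formatting does not depend on the machine's regional settings:

```csharp
using System;
using System.Globalization;

string str1 = "Con";
string str2 = "Cat";

// The same index can be used more than once, in any order.
string repeated = string.Format("{1}{0}{1}", str1, str2);

// N2 formats a number with group separators and two decimal places.
string price = string.Format(CultureInfo.InvariantCulture, "{0} costs {1:N2}", str2, 1234.5);

// "{{" and "}}" are written out as literal braces around the inserted value.
string braces = string.Format("{{{0}}}", str1);

Console.WriteLine(repeated); // Output: CatConCat
Console.WriteLine(price);    // Output: Cat costs 1,234.50
Console.WriteLine(braces);   // Output: {Con}
```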

C# String Interpolation

```csharp
string str1 = "Con";
string str2 = "Cat";
string concat = $"{str1}{str2}";
Console.WriteLine(concat); // Output: ConCat
```

In this code, the same two string variables, str1 and str2, are initialized with the values “Con” and “Cat”. The $ prefix marks the string literal as an interpolated string. Each expression inside curly braces is evaluated and inserted into the result at that position. The resulting string, “ConCat”, is assigned to concat, and Console.WriteLine prints it to the console.
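Interpolation supports the same format strings and alignment as string.Format, and any C# expression can appear inside the braces. Here is a minimal sketch with illustrative values:

```csharp
using System;

string str1 = "Con";
string str2 = "Cat";
int count = 42;

// Any expression can go inside the braces, including method calls and properties.
string shouted = $"{str1.ToUpperInvariant()}{str2.Length}"; // "CON3"

// A format string follows a colon: D5 pads an integer with leading zeros to five digits.
string padded = $"{count:D5}";                              // "00042"

// An alignment follows a comma: negative pads on the right, positive pads on the left.
string aligned = $"[{str1,-5}][{str2,5}]";                  // "[Con  ][  Cat]"

Console.WriteLine(shouted);
Console.WriteLine(padded);
Console.WriteLine(aligned);
```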

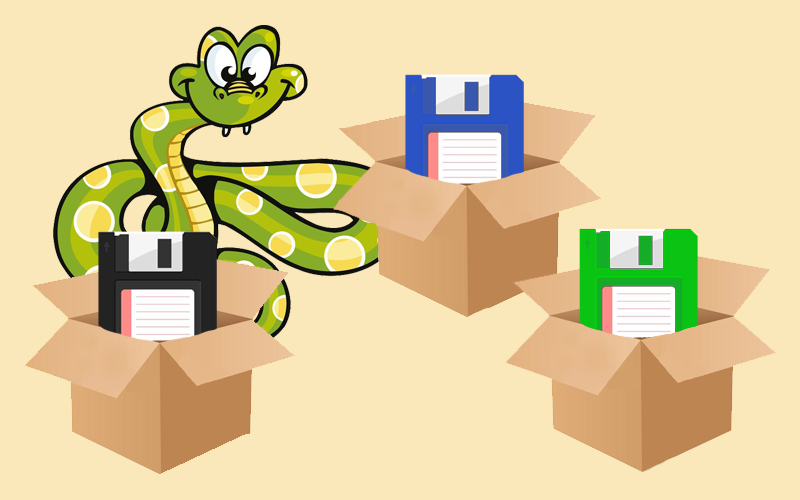

C# StringBuilder Class

```csharp
using System;
using System.Text; // StringBuilder lives in the System.Text namespace

StringBuilder sb = new StringBuilder();
sb.Append("Con");
sb.Append("Cat");
string concat = sb.ToString();
Console.WriteLine(concat); // Output: ConCat
```

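In this code, a StringBuilder object is created with its parameterless constructor. Unlike string, StringBuilder is mutable. Each Append call adds text to an internal buffer instead of allocating a new string, and ToString creates the final string once. This makes it more efficient than repeated + or += when you build a string from a large number of pieces, for example in a loop. For a handful of strings, the simpler methods above work just as well.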
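The difference shows up when a string is built in a loop. In the naive version below, each += allocates a new string and copies everything accumulated so far. The StringBuilder version appends into one growable buffer and creates the final string only once. Append also returns the same StringBuilder instance, so calls can be chained. The parts array is illustrative:

```csharp
using System;
using System.Text;

string[] parts = { "Con", "Cat", "enation" };

// Naive version: every += creates a new string object.
string naive = "";
foreach (string part in parts)
{
    naive += part;
}

// StringBuilder version: Append writes into the builder's buffer.
var sb = new StringBuilder();
foreach (string part in parts)
{
    sb.Append(part);
}
string built = sb.ToString(); // the final string is created here, once

// Append returns the same builder, so calls can be chained.
string chained = new StringBuilder().Append("Con").Append("Cat").Append('!').ToString();

Console.WriteLine(naive);   // Output: ConCatenation
Console.WriteLine(built);   // Output: ConCatenation
Console.WriteLine(chained); // Output: ConCat!
```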
