GPT-4 AI Language Model: Deep Dive Into Advanced Technology

All Information Regarding GPT-4

We live in unusual times, where each new model launch dramatically transforms the field of artificial intelligence. OpenAI unveiled DALL-E 2, a cutting-edge text-to-image model, in July 2022. Stability AI followed a few weeks later with Stable Diffusion, an open-source alternative to DALL-E 2. Both of these popular models have shown promising results, both in output quality and in their ability to understand the prompt.

Whisper is an Automatic Speech Recognition (ASR) model that OpenAI released recently. In terms of accuracy and robustness, it has fared better than many earlier models.

We can infer from this trend that OpenAI will release GPT-4 in the coming months. Large language models are in high demand, and after the success of GPT-3, people expect GPT-4 to deliver better accuracy, better compute optimization, lower bias, and increased safety.

Although OpenAI has said nothing about the release date or features, this post makes some assumptions and predictions about GPT-4 based on AI trends and the information OpenAI has provided. We will also look at large language models and their uses.

What is GPT?

Generative Pre-trained Transformer (GPT) is a deep learning model for text generation that was trained on internet data. It is used in conversational AI, machine translation, text summarization, and classification.

By studying the Deep Learning in Python skill track, you can learn how to create your own deep learning model. You will learn about the principles of deep learning, the TensorFlow and Keras frameworks, and how to use Keras to build models with multiple inputs and outputs.

GPT models have countless uses, and you can fine-tune them on specific data to get even better results. Starting from a pre-trained transformer instead of training from scratch saves compute, time, and other resources.

Prior to GPT

Before GPT-1, most Natural Language Processing (NLP) models were trained for specific tasks such as classification or translation. All of them used supervised learning. This form of learning has two problems: a scarcity of labeled data and an inability to generalize across tasks.

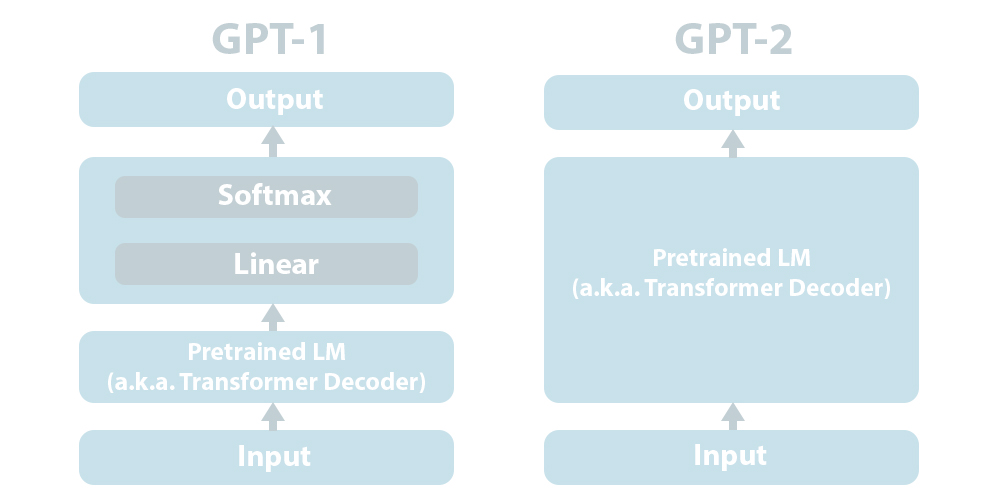

GPT-1

The GPT-1 paper (117M parameters), Improving Language Understanding by Generative Pre-Training, was released in 2018. It proposed a generative language model that was trained on unlabeled data and then fine-tuned for specific downstream tasks such as classification and sentiment analysis.

GPT-2

The GPT-2 paper (1.5B parameters), Language Models are Unsupervised Multitask Learners, was released in 2019. To create an even more powerful language model, it was trained on a larger dataset with more parameters. To improve performance, GPT-2 relies on task conditioning, zero-shot learning, and zero-shot task transfer.

GPT-3

The GPT-3 paper (175B parameters), Language Models are Few-Shot Learners, was released in 2020. The model has more than 100 times as many parameters as GPT-2. It was trained on an even larger dataset so that it would perform well on downstream tasks. It has astounded the world with its human-like story writing, SQL queries, Python scripts, language translation, and summarization. Using in-context learning in few-shot, one-shot, and zero-shot settings, it achieved state-of-the-art results.
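To make the zero-shot, one-shot, and few-shot settings concrete, here is a minimal sketch of how such prompts can be assembled as plain text. The function name `build_prompt`, the `Input:`/`Output:` layout, and the translation examples are assumptions chosen for illustration; they are not a format prescribed by OpenAI. The only thing that changes between settings is how many worked examples are placed before the query.

```python
def build_prompt(task, examples, query):
    """Assemble a zero-, one-, or few-shot prompt as plain text.

    The number of (input, output) pairs in `examples` decides the setting:
    0 pairs is zero-shot, 1 is one-shot, more than 1 is few-shot.
    The layout is a hypothetical one chosen for this sketch.
    """
    lines = [task]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    # The final "Output:" is left empty for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


demos = [("cheese", "fromage"), ("house", "maison")]
zero_shot = build_prompt("Translate English to French.", [], "cat")
few_shot = build_prompt("Translate English to French.", demos, "cat")
print(few_shot)
```

No model weights are updated in any of these settings. The examples live only in the prompt, which is what "in-context learning" refers to.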

What’s New in GPT-4?

Sam Altman, the CEO of OpenAI, confirmed the rumors about the upcoming GPT-4 model during the question-and-answer portion of the AC10 online meetup. This section uses that information, together with current trends, to make predictions about model size, optimal parameterization and compute, multimodality, sparsity, and performance.

Model Size

According to Altman, GPT-4 won’t be significantly larger than GPT-3. Therefore, we can assume that it will have between 175B and 280B parameters, similar to DeepMind’s Gopher language model.

With 530B parameters, the large Megatron-Turing NLG model is three times bigger than GPT-3 yet performs comparably. Smaller models released after it reached higher performance levels. Simply put, larger size does not guarantee better performance.

According to Altman, OpenAI is concentrating on getting better performance out of smaller models. Large language models require a massive dataset, a lot of computing power, and a complicated implementation. For many businesses, even deploying huge models is not cost-effective.

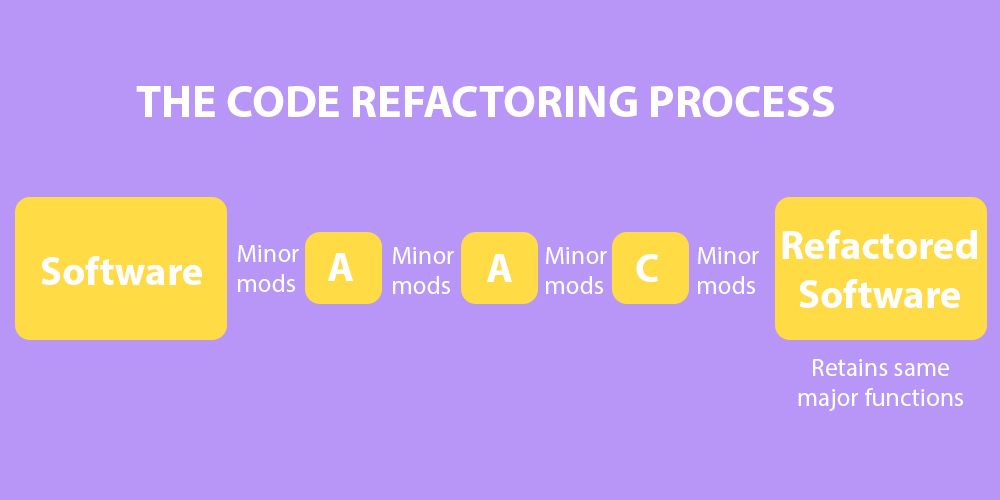

Ideal parameterization

Large models are typically under-optimized. Because training a model is so costly, companies must trade accuracy against cost. For instance, GPT-3 was trained only once, despite errors. Researchers could not perform hyperparameter tuning because the cost was prohibitive.

Microsoft and OpenAI have demonstrated that GPT-3 could be improved by training it with well-tuned hyperparameters. According to their results, a 6.7B GPT-3 model with tuned hyperparameters matched the performance of the 13B GPT-3 model.

According to a new parameterization (μP), the best hyperparameters for a larger model are the same as the best for a smaller model with the same architecture. This has made it much more affordable for researchers to optimize large models.

Ideal computation

Microsoft and OpenAI recently demonstrated that GPT-3 could be improved further if the model were trained with optimal hyperparameters. They found that a 6.7B version of GPT-3 improved so much that it was on par with the original 13B model. For smaller models, hyperparameter tuning produced a performance boost equivalent to doubling the number of parameters. Using a new parameterization they discovered (called μP), the best hyperparameters for a small model were also the best for a larger one in the same family. With μP they were able to optimize models of any size for a tiny fraction of the training cost. The tuned hyperparameters can then be transferred to the larger model at almost no cost.
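The workflow described above (tune on a small proxy, then reuse the result on a large model) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the function `transfer_hidden_lr` is hypothetical, the sweep values are made-up placeholders, and the scaling rule shown (the effective Adam learning rate of hidden layers shrinking in proportion to width) is our reading of the parameterization from the Microsoft and OpenAI work. Other layer types follow different rules that the sketch ignores.

```python
def transfer_hidden_lr(tuned_lr, base_width, target_width):
    """Scale a hidden-layer Adam learning rate from a narrow proxy to a wider model.

    Assumption: the effective hidden-layer learning rate shrinks in
    proportion to width. Input layers, biases, and other hyperparameters
    are not handled by this sketch.
    """
    if base_width <= 0 or target_width <= 0:
        raise ValueError("widths must be positive")
    return tuned_lr * base_width / target_width


# Hypothetical sweep on a cheap, narrow proxy model: learning rate -> validation loss.
# These numbers are placeholders, not measured results.
proxy_sweep = {1e-4: 3.21, 3e-4: 3.05, 1e-3: 2.98, 3e-3: 3.40}
best_proxy_lr = min(proxy_sweep, key=proxy_sweep.get)

# Reuse the proxy's best value for a model 16 times wider, without a new sweep.
large_lr = transfer_hidden_lr(best_proxy_lr, base_width=256, target_width=4096)
print(best_proxy_lr, large_lr)
```

The point of the design is that the expensive sweep runs only on the small model. The large model is trained once, with hyperparameters derived from the proxy.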

Compute-optimal models

Recently, DeepMind revisited Kaplan’s scaling research and discovered that, contrary to popular belief, the number of training tokens affects performance as much as model size does. They concluded that any additional compute budget should be split equally between scaling parameters and scaling data. To test their hypothesis they trained Chinchilla, a 70B model (four times smaller than Gopher, the previous SOTA), on about four times as much data as any major language model since GPT-3 (1.4T tokens, as opposed to the typical 300B). The results were unmistakable. Across a variety of language benchmarks, Chinchilla outperformed Gopher, GPT-3, MT-NLG, and all other language models “uniformly and significantly”. The implication is that today’s models are oversized and undertrained.

Given that GPT-4 will be slightly bigger than GPT-3, it would require about 5 trillion training tokens to be compute-optimal, according to DeepMind’s findings. That is an order of magnitude more than current training datasets contain. Using Gopher’s compute budget as a proxy, OpenAI would need between 10 and 20 times more FLOPs than they used for GPT-3 to reach the lowest training loss. When Altman indicated in the Q&A that GPT-4 will need a lot more compute than GPT-3, he might have been alluding to this.
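The arithmetic behind these estimates can be sketched in a few lines. The tokens-per-parameter ratio is derived from the Chinchilla figures quoted above (1.4T tokens for 70B parameters). The FLOPs formula, roughly 6 FLOPs per parameter per training token, is a commonly used approximation and is an assumption here, not something stated by OpenAI or taken from this post. The function names are hypothetical.

```python
# Figures quoted in the text above.
CHINCHILLA_PARAMS = 70e9
CHINCHILLA_TOKENS = 1.4e12
GPT3_PARAMS = 175e9
GPT3_TOKENS = 300e9

# Chinchilla's ratio of training tokens to parameters (20 tokens per parameter).
TOKENS_PER_PARAM = CHINCHILLA_TOKENS / CHINCHILLA_PARAMS


def compute_optimal_tokens(n_params):
    """Training tokens needed if the model follows Chinchilla's ratio."""
    return n_params * TOKENS_PER_PARAM


def training_flops(n_params, n_tokens):
    """Rough training cost. Assumption: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


gpt3_flops = training_flops(GPT3_PARAMS, GPT3_TOKENS)
for n_params in (175e9, 280e9):
    tokens = compute_optimal_tokens(n_params)
    ratio = training_flops(n_params, tokens) / gpt3_flops
    print(f"{n_params / 1e9:.0f}B params: {tokens / 1e12:.1f}T tokens, "
          f"{ratio:.1f}x GPT-3 training FLOPs")
```

For the 175B to 280B range assumed earlier, this gives roughly 3.5T to 5.6T tokens, which brackets the 5 trillion figure. The FLOPs multiple it produces is a back-of-the-envelope number in the same order of magnitude as the 10 to 20 times estimate; it is not meant to reproduce that estimate exactly.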

How far OpenAI will incorporate these optimality findings into GPT-4 is unknown, since their budget is not public, but it seems likely that they will do so to some extent. What is more certain is that they will concentrate on optimizing factors other than model size. Finding the best set of hyperparameters, the compute-optimal model size, and the right number of training tokens could lead to astounding improvements across all benchmarks. If these approaches are combined in a single model, current forecasts for language models will likely fall short.

Altman added that people would not believe how good models can get without being made larger. He might be implying that scaling efforts are over for the time being.

GPT-4 will be a text-only model

Multimodal models are the future of deep learning. Our brains are multisensory because we live in a multimodal world. Perceiving the world in only one mode at a time severely limits AI’s ability to navigate or understand it.

However, building a good multimodal model is much harder than building a good language-only or vision-only model. It is difficult to combine visual and textual information into a single representation. We have very little understanding of how the brain does it, so we are unsure how to implement it in neural networks (not that the deep learning community pays much attention to insights from the cognitive sciences on brain structure and function). In the Q&A, Altman stated that GPT-4 will be a text-only model rather than a multimodal one (like DALL-E or MUM). My guess is that they are trying to push language models to their limits before moving on to the next generation of multimodal AI.

Sparsity

Recent research has had considerable success with sparse models, which use conditional computation to process different types of input with different parts of the model. These models scale beyond the 1T-parameter mark without incurring significant computational cost, which makes model size and compute budget look almost orthogonal. On very large models, however, the advantages of mixture-of-experts (MoE) techniques diminish.

Given OpenAI’s history of focusing on dense language models, it makes sense to assume that GPT-4 will also be a dense model. Altman also stated that GPT-4 won’t be significantly bigger than GPT-3, so we may infer that sparsity is not an option for OpenAI at this time.

Given that our brain, which served as the inspiration for AI, relies heavily on sparse processing, sparsity, like multimodality, will most likely dominate future generations of neural networks.
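A toy example helps show why parameter count and per-input compute come apart in a sparse model. The class below is a deliberately simplified top-1 mixture-of-experts layer over scalar inputs. The class name `ToyMoELayer`, the linear router, and the sizes are all assumptions made for illustration; real MoE layers use learned routers over vectors and add load balancing, none of which is shown here.

```python
import random


class ToyMoELayer:
    """Top-1 mixture-of-experts over scalar inputs (illustrative only)."""

    def __init__(self, num_experts, params_per_expert, seed=0):
        rng = random.Random(seed)
        # Each expert is just a list of weights in this sketch.
        self.experts = [
            [rng.uniform(-1, 1) for _ in range(params_per_expert)]
            for _ in range(num_experts)
        ]
        # One routing weight per expert.
        self.router = [rng.uniform(-1, 1) for _ in range(num_experts)]

    def total_params(self):
        return sum(len(expert) for expert in self.experts) + len(self.router)

    def forward(self, x):
        # The router scores every expert and keeps only the best one.
        scores = [w * x for w in self.router]
        chosen = max(range(len(scores)), key=scores.__getitem__)
        # Conditional computation: only the chosen expert's weights are used.
        weights = self.experts[chosen]
        output = sum(w * x for w in weights)
        return output, chosen, len(weights)


small = ToyMoELayer(num_experts=2, params_per_expert=8)
large = ToyMoELayer(num_experts=64, params_per_expert=8)
for layer in (small, large):
    _, _, active = layer.forward(0.5)
    print(f"total params: {layer.total_params()}, expert params used: {active}")
```

Adding experts grows the total parameter count, while the number of expert weights touched per input stays fixed. The only cost that grows is the routing step. A dense model, by contrast, uses every parameter for every input.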

AI alignment

OpenAI has put a lot of work into the AI alignment problem: how to get language models to follow our intentions and adhere to our values. It is a hard problem both technically (how can we make AI understand precisely what we want?) and philosophically (there is no single way to align AI with humans, because human values vary greatly between groups and are sometimes at odds with one another).

Their first attempt was InstructGPT, a GPT-3 model retrained with human feedback to learn to follow instructions (whether those instructions are well-intended or not is not yet factored into the models).

The main breakthrough of InstructGPT is that, regardless of its results on language benchmarks, human judges rate it as a better model (the judges were a fairly homogeneous group of OpenAI staff and English speakers, so we should be cautious about generalizing). This highlights the need to move past benchmarks as the sole criterion for judging AI’s ability. How humans perceive the models may be even more important than the models themselves.

Given Altman and OpenAI’s commitment to building a beneficial AGI, I do not doubt that GPT-4 will apply and build on what they learned from InstructGPT.

They will also improve the alignment process, which so far has been limited to OpenAI employees and English-speaking labelers. True alignment should involve individuals and groups with different backgrounds and characteristics in terms of gender, ethnicity, nationality, religion, and so on. Any progress in that direction is welcome, though we should be careful about calling it alignment when it does not yet reflect most people.

Conclusion

Model size: GPT-4 will be bigger than GPT-3, but not very big compared with the largest models currently available (MT-NLG 530B and PaLM 540B). Model size won’t be a defining characteristic.

Optimality: GPT-4 will use more compute than GPT-3. It will apply new optimality insights on parameterization (optimal hyperparameters) and on scaling laws (the number of training tokens is as important as model size).

Multimodality: GPT-4 will be a text-only model, not a multimodal one. OpenAI wants to get the most out of language models before switching entirely to multimodal models like DALL-E, which they believe will eventually outperform unimodal systems.

Sparsity: GPT-4 will be a dense model, continuing the trend from GPT-2 and GPT-3 (all parameters will be in use to process any given input). Sparsity will become more dominant in the future.

Alignment: GPT-4 will be more aligned with us than GPT-3. It will apply the lessons learned from InstructGPT, which was trained with human feedback. However, AI alignment still has a long way to go, so these efforts should be assessed carefully and not overstated.
