Enterprise Data Strategy Roadmap: Which Model to Choose and Follow

Data Strategy Roadmap

A Data Strategy roadmap is a step-by-step plan for moving a company from its current state to its desired future state. It is a more detailed, researched version of the Data Strategy that specifies when and how specific improvements to the business’s data processes should be implemented. A Data Strategy roadmap helps align an organization’s processes with its business goals.

A good roadmap aligns the many solutions used to modernize an organization and helps establish a solid foundation for the business.

A major benefit of a Data Strategy roadmap is that it removes chaos and confusion, which saves time, money, and resources during the change process. The roadmap can also serve as a tool for communicating plans to stakeholders, staff, and management. A good roadmap should include the following items:

- Specific objectives: A list of what should be completed by the end of this project.

- The people: A breakdown of who will be in charge of each step of the process.

- Timeline: A plan for completing each phase or project. There should be an understanding of what comes first.

- The funding required for each phase of the Data Strategy.

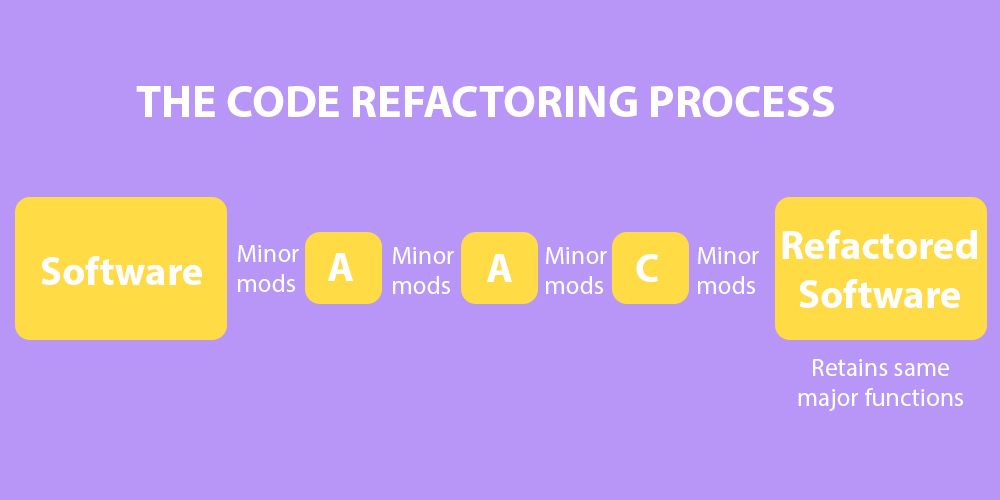

- The software: A description of the software required to meet the precise goals outlined in the Data Strategy roadmap.
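The roadmap items above map naturally onto one record per phase. The following is a minimal sketch of how they could be captured in Python. The `Phase` class, its fields, and the sample phases, owners, dates, and amounts are all hypothetical illustrations, not a standard schema. The sketch uses a topological sort to work out "what comes first" and flags phases that are scheduled to start before their prerequisites end.

```python
from dataclasses import dataclass, field
from datetime import date
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class Phase:
    """One roadmap phase (hypothetical schema mirroring the list above)."""
    name: str
    objectives: list[str]   # what should be complete when the phase ends
    owner: str              # who is in charge of this phase
    start: date
    end: date
    budget: float           # funding allocated to this phase
    software: list[str] = field(default_factory=list)
    depends_on: list[str] = field(default_factory=list)  # phases that must finish first


def execution_order(phases: list[Phase]) -> list[str]:
    """Order phase names so every phase comes after the phases it depends on."""
    graph = {p.name: set(p.depends_on) for p in phases}
    return list(TopologicalSorter(graph).static_order())


def check_timeline(phases: list[Phase]) -> list[str]:
    """Flag phases scheduled to start before one of their dependencies ends."""
    by_name = {p.name: p for p in phases}
    return [
        f"{p.name} starts before {dep} ends"
        for p in phases
        for dep in p.depends_on
        if p.start < by_name[dep].end
    ]


def total_budget(phases: list[Phase]) -> float:
    """Sum the funding required across all phases."""
    return sum(p.budget for p in phases)


# Hypothetical example roadmap.
roadmap = [
    Phase("Governance", ["Assign data owners"], "Chief Data Officer",
          date(2024, 1, 1), date(2024, 3, 31), 50_000),
    Phase("Platform", ["Deploy data warehouse"], "Data engineering lead",
          date(2024, 4, 1), date(2024, 9, 30), 200_000,
          software=["data warehouse"], depends_on=["Governance"]),
    Phase("Analytics", ["Launch self-service dashboards"], "BI lead",
          date(2024, 9, 1), date(2024, 12, 31), 80_000,
          software=["BI tool"], depends_on=["Platform"]),
]

print(execution_order(roadmap))  # ['Governance', 'Platform', 'Analytics']
print(check_timeline(roadmap))   # ['Analytics starts before Platform ends']
print(total_budget(roadmap))     # 330000
```

In this example, the overlap flag shows how a roadmap kept in a structured form can surface scheduling conflicts before they become budget or staffing problems.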

Why Do You Need a Data Strategy Roadmap?

It’s nearly impossible to reach your goal if you don’t know where you’re going. A data strategy roadmap breaks the big picture down into manageable parts. With a roadmap in hand, you always know how far you’ve progressed and whether you’re on schedule.

When developing a data strategy, it is critical to consider every area of implementation. If you try to tackle everything at once in a single monolithic effort, you will quickly run out of steam. Before starting any data-related project, prepare a detailed plan that covers everything from the project’s scope to its cost.

A data strategy roadmap allows you to explain to stakeholders and management what you expect to achieve. It also serves as a convenient reference point for future data initiatives.

Roadmaps help you visualize your approach and keep your entire organization focused on its objectives. Implementing a modern data and analytics platform will improve your organization’s data-driven decision-making and can help turn big data from a buzzword into a valuable business asset. Even getting started requires a solid understanding of your organization’s goals and the resources needed to achieve them.

Other components of the Data Strategy Roadmap include:

- The Business Case – Create a business case for implementing a data strategy.

- Data Governance Strategy – Create an efficient governance system for managing data assets.

- Data Management Strategy – Determine the resources required to manage the data.

- Data Quality Assurance Plan – Define best practices for maintaining high data standards for accurate reporting.

- Plan for Data Analytics – Create a procedure for analyzing data to support decision-making.

Business Case

One of the most important aspects of a data strategy roadmap is defining the business case for a modern data platform. Your business case should answer the following questions:

- What problem does a modern data platform solve?

- Are there any additional costs associated with the data platform?

- What is the total cost of ownership (TCO)?

- What is the expected return on investment (ROI)?

- What is the deployment timeline?

- Do we have enough money to finish the project?
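The cost questions above reduce to simple arithmetic. The following is a minimal sketch that assumes undiscounted cash flows: TCO as upfront costs plus recurring costs over the evaluation period, ROI as (benefit − cost) / cost, and a payback period. All figures are hypothetical. A real business case might also discount future cash flows, for example with net present value.

```python
from typing import Optional


def total_cost_of_ownership(upfront: float, annual_cost: float, years: int) -> float:
    """Undiscounted TCO: one-off costs plus recurring costs over the period."""
    return upfront + annual_cost * years


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """ROI as a fraction of cost: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


def payback_years(upfront: float, annual_benefit: float,
                  annual_cost: float) -> Optional[float]:
    """Years until net annual benefit repays the upfront spend; None if it never does."""
    net = annual_benefit - annual_cost
    return upfront / net if net > 0 else None


# Hypothetical figures for a three-year business case.
upfront = 300_000         # e.g. migration and implementation
annual_cost = 120_000     # e.g. subscriptions and staff
annual_benefit = 400_000  # estimated yearly benefit
years = 3
budget = 700_000          # approved funding

tco = total_cost_of_ownership(upfront, annual_cost, years)
roi = simple_roi(annual_benefit * years, tco)
print(tco)                 # 660000
print(round(roi, 2))       # 0.82
print(round(payback_years(upfront, annual_benefit, annual_cost), 2))  # 1.07
print(tco <= budget)       # True: enough money to finish the project
```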

Plan For Data Governance

A solid data governance strategy serves as the foundation for a strong data strategy. You should create a structure for managing your organization’s data assets. You’ll also need to define roles and responsibilities, determine who owns what data, locate the data, and develop policies and procedures for accessing and using the data.

The following aspects should be included in a strong data governance plan:

- Roles and responsibilities – Explain who will have access to the data, how they will access it, and who will supervise their activities.

- Data asset ownership – Determine who owns each piece of data and how it will be used.

- Data location – Specify where the data will be stored and how it will be accessed.
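These three governance aspects can be recorded together for each data asset. The following is a minimal, hypothetical catalog written in plain Python. It is not a real data catalog product or API. It records an owner, a storage location, and the roles allowed to read each asset, and it keeps an audit trail so that access can be supervised. In practice, organizations usually enforce this through their data platform's own access controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    """Hypothetical catalog entry for one governed data asset."""
    name: str
    owner: str                # accountable data owner
    location: str             # where the data is stored
    readers: frozenset[str]   # roles allowed to read the asset


class GovernanceCatalog:
    """Illustrative registry of owners, locations, and role-based read access."""

    def __init__(self) -> None:
        self._assets: dict[str, DataAsset] = {}
        self.audit_log: list[tuple[str, str, bool]] = []  # (role, asset, allowed)

    def register(self, asset: DataAsset) -> None:
        if asset.name in self._assets:
            raise ValueError(f"{asset.name} is already registered")
        self._assets[asset.name] = asset

    def owner_of(self, name: str) -> str:
        return self._assets[name].owner

    def location_of(self, name: str) -> str:
        return self._assets[name].location

    def can_read(self, role: str, name: str) -> bool:
        allowed = role in self._assets[name].readers
        self.audit_log.append((role, name, allowed))  # trail for supervision
        return allowed


catalog = GovernanceCatalog()
catalog.register(DataAsset(
    name="sales_orders",
    owner="Head of Sales Operations",
    location="warehouse.sales.orders",
    readers=frozenset({"analyst", "finance"}),
))

print(catalog.owner_of("sales_orders"))               # Head of Sales Operations
print(catalog.can_read("analyst", "sales_orders"))    # True
print(catalog.can_read("marketing", "sales_orders"))  # False
```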

Plan For Data Management

After you’ve defined the scope of your data strategy and the types of data you’ll use, you must decide how to manage that data. The data management plan specifies the tools and processes used for data management. Important considerations include:

- Data management resources – Make a list of the resources needed to manage the data. Hardware, software, people, and training may all be included.

- Data classification – Determine the types of data that will be stored, for example structured data such as financial records and unstructured data such as emails and text documents.

- Storage options – Select the storage option that best meets your requirements.
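Classification and storage selection can be linked so that each kind of data goes to a suitable store. The following is a minimal sketch that classifies files by extension. This is a deliberately simplistic, hypothetical rule. The storage targets ("relational data warehouse" and "object storage") are illustrative choices, not recommendations for any particular product.

```python
from enum import Enum
from pathlib import PurePath


class DataClass(Enum):
    STRUCTURED = "structured"      # e.g. financial records in tables
    UNSTRUCTURED = "unstructured"  # e.g. emails and text documents


# Hypothetical rule: these extensions are treated as structured data.
STRUCTURED_EXTENSIONS = {".csv", ".parquet", ".sql"}

# Hypothetical mapping from data class to storage option.
STORAGE_FOR = {
    DataClass.STRUCTURED: "relational data warehouse",
    DataClass.UNSTRUCTURED: "object storage",
}


def classify(filename: str) -> DataClass:
    """Classify a file by its extension (case-insensitive)."""
    suffix = PurePath(filename).suffix.lower()
    if suffix in STRUCTURED_EXTENSIONS:
        return DataClass.STRUCTURED
    return DataClass.UNSTRUCTURED


def storage_plan(filenames: list[str]) -> dict[str, str]:
    """Map each file to the storage option chosen for its data class."""
    return {f: STORAGE_FOR[classify(f)] for f in filenames}


print(storage_plan(["ledger_2023.csv", "support_inbox.eml", "contract.pdf"]))
```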

Plan For Data Quality Assurance

The data quality assurance plan specifies the methods and mechanisms used to ensure that the data meets your requirements. A data quality assurance plan includes the following elements:

- Requirements identification – Describe the standards that must be met before the data can be shared.

- Metrics definition – Define the metrics that will be used to assess the performance of the data quality program.

- Data testing methodology – Outline the data testing process.

- Results reporting – Describe how test results will be reported and to whom.

- Process monitoring – Track the progress of the data quality program and report back to stakeholders.
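The elements above (requirements, metrics, testing, and reporting) can be tied together in a small check runner. The following is a minimal sketch that uses two common quality metrics: completeness (the share of non-null values) and validity (the share of values that pass a rule). The field names, rules, thresholds, and sample records are hypothetical. Data would be shared only if every metric meets its threshold.

```python
from typing import Any, Callable

Record = dict[str, Any]


def completeness(records: list[Record], field: str) -> float:
    """Share of records in which `field` is present and not None."""
    if not records:
        return 1.0
    return sum(1 for r in records if r.get(field) is not None) / len(records)


def validity(records: list[Record], field: str,
             is_valid: Callable[[Any], bool]) -> float:
    """Share of non-null values of `field` that satisfy `is_valid`."""
    values = [r[field] for r in records if r.get(field) is not None]
    if not values:
        return 1.0
    return sum(1 for v in values if is_valid(v)) / len(values)


def run_checks(records: list[Record], requirements: dict) -> dict:
    """Score each metric and compare it with its minimum acceptable value."""
    report = {}
    for name, (measure, threshold) in requirements.items():
        score = measure(records)
        report[name] = {"score": round(score, 3), "threshold": threshold,
                        "passed": score >= threshold}
    return report


# Hypothetical sample data.
records = [
    {"customer_id": 1, "email": "a@example.com", "amount": 120.0},
    {"customer_id": 2, "email": None, "amount": 80.5},
    {"customer_id": 3, "email": "c@example.com", "amount": -5.0},
    {"customer_id": 4, "email": "d@example.com", "amount": 42.0},
]

# Requirements: metric name -> (measurement, minimum score before sharing).
requirements = {
    "customer_id completeness": (lambda rs: completeness(rs, "customer_id"), 1.0),
    "email completeness": (lambda rs: completeness(rs, "email"), 0.95),
    "amount validity": (lambda rs: validity(rs, "amount", lambda v: v >= 0), 0.99),
}

report = run_checks(records, requirements)
for name, result in report.items():
    print(name, result)
print("ready to share:", all(r["passed"] for r in report.values()))  # ready to share: False
```

Running the same checks on every load and keeping the reports over time provides the monitoring trail that stakeholders can review.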

Plan For Data Analytics

The data analytics plan details the analytical approaches used to analyze the data. An analytics plan covers the following aspects:

- Process and approach – Describe your analytical process, from prioritization to guided navigation to self-service analytics.

- Data preparation – Describe the actions taken before the data is analyzed.

- Use cases – Define the scenarios that will drive the analytical methods.

- Business rules and data models – Describe the business rules and data models applied to the data.

- Presentation – Explain how the analytics presentation will be used internally and externally.
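Data preparation and business rules often come down to filtering and normalizing records before any aggregation. The following is a minimal sketch under hypothetical assumptions. The business rule is that only completed orders with an amount count toward revenue. The preparation step standardizes region names before totals are computed per region.

```python
from collections import defaultdict


def is_countable(order: dict) -> bool:
    """Hypothetical business rule: only completed orders with an amount count."""
    return order.get("status") == "completed" and order.get("amount") is not None


def prepare(orders: list[dict]) -> list[dict]:
    """Data preparation: apply the rule and normalize region names."""
    return [
        {**o, "region": o["region"].strip().title()}
        for o in orders
        if is_countable(o)
    ]


def revenue_by_region(orders: list[dict]) -> dict[str, float]:
    """Total revenue per region, computed on prepared data only."""
    totals: defaultdict[str, float] = defaultdict(float)
    for o in prepare(orders):
        totals[o["region"]] += o["amount"]
    return dict(totals)


orders = [
    {"region": " north ", "status": "completed", "amount": 100.0},
    {"region": "North", "status": "cancelled", "amount": 50.0},
    {"region": "south", "status": "completed", "amount": 70.0},
    {"region": "South", "status": "completed", "amount": None},
]

print(revenue_by_region(orders))  # {'North': 100.0, 'South': 70.0}
```

Keeping the rule in a named function makes it easy to document in the analytics plan and to change in one place.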

In conclusion

A data strategy is a plan that describes how a business will use data to achieve specific business goals. It sets expectations and provides a clear sense of direction. A data strategy roadmap is a useful tool for implementing that strategy.

Outlining these expectations in a roadmap engages the entire organization in the journey. This matters for many reasons, the most important being that it helps data consumers fully understand the cultural transformation required to become a data-informed organization.
