Instructions for Setting Up HTTPS and SSL Using Azure

How to use HTTPS with Azure and install SSL

HTTPS (Hypertext Transfer Protocol Secure) is a secure version of the HTTP protocol used for transmitting data over the internet. It encrypts communication between a website and its visitors to protect sensitive data from being intercepted. In today’s internet landscape, it is becoming increasingly important for website owners to use HTTPS to secure their websites and protect their visitors’ data.
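To make the protection described above more concrete, the following minimal sketch uses Python's standard `ssl` module to show what an HTTPS client checks by default before it sends any data. It is not specific to Azure, and the helper name `describe_default_tls_context` is made up for this illustration.

```python
import ssl


def describe_default_tls_context() -> dict:
    """Return the verification settings of Python's default TLS client context."""
    context = ssl.create_default_context()
    return {
        # The server must present a certificate that chains to a trusted CA.
        "certificate_required": context.verify_mode == ssl.CERT_REQUIRED,
        # The certificate must match the host name being requested.
        "hostname_checked": context.check_hostname,
    }
```

Both values should be true for the default context, which is why a site needs a valid certificate for its domain before visitors can connect over HTTPS without warnings.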

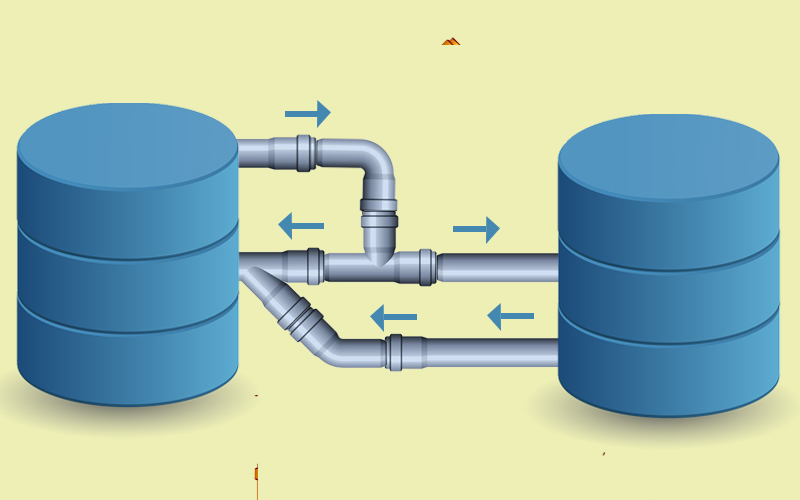

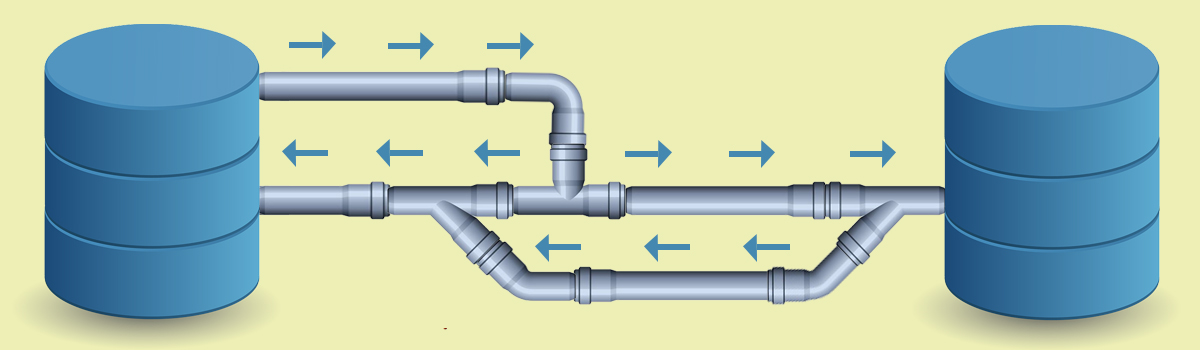

HTTPS connection with Azure

One way to implement HTTPS on a website is through Azure, a cloud computing platform and infrastructure created by Microsoft. Azure offers a range of tools and services that can be used to secure and manage a website, including options for implementing HTTPS.

There are several ways to enable HTTPS on a website hosted on Azure. One option is to use Azure App Service, a platform-as-a-service (PaaS) offering that allows developers to build and host web applications. The default `azurewebsites.net` address of an App Service app is already reachable over HTTPS; turning on the “HTTPS Only” option in the App Service configuration goes one step further and redirects all HTTP requests to HTTPS.

Instructions for setting up HTTPS with Azure App Service

To enable HTTPS with Azure App Service, follow these steps:

- Navigate to the App Service page in the Azure portal.

- Select the app for which you want to enable HTTPS.

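- In the left-hand menu, click on “SSL certificates.” Depending on the portal version, the “HTTPS Only” setting may instead appear under “TLS/SSL settings” or under “Configuration” in the general settings.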

- Click on the “HTTPS Only” option.

- Click on the “Save” button to apply the changes.

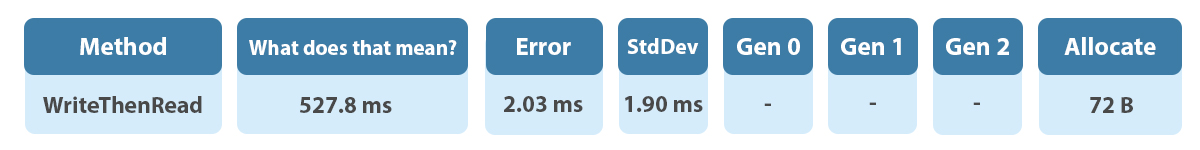

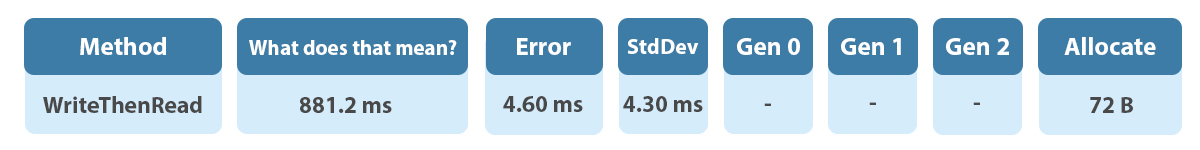
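The same setting can also be changed without the portal. The sketch below assumes the Azure CLI is installed and signed in (`az login`), and wraps its `az webapp update --https-only true` command in Python. The function names and the resource group and app names are placeholders chosen for this example, not values from this article.

```python
import subprocess


def build_https_only_command(resource_group: str, app_name: str) -> list[str]:
    """Build the Azure CLI call that turns on HTTPS Only for one web app."""
    return [
        "az", "webapp", "update",
        "--resource-group", resource_group,
        "--name", app_name,
        "--https-only", "true",
    ]


def enable_https_only(resource_group: str, app_name: str) -> None:
    """Run the command; requires the Azure CLI and a prior `az login`."""
    subprocess.run(build_https_only_command(resource_group, app_name), check=True)
```

Calling `enable_https_only("my-resource-group", "my-web-app")` would apply the change to a hypothetical app with those names. Building the command as a list, rather than as one shell string, avoids quoting problems with unusual names.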

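Once “HTTPS Only” is enabled for an app, Azure App Service redirects HTTP requests to HTTPS. The app's default `azurewebsites.net` address is covered by a certificate that Microsoft manages. A custom domain still needs its own certificate bound to it; one option is a free App Service managed certificate, which is issued for six months at a time and renewed automatically by Azure App Service.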
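Because certificates expire, it can be useful to check how long the one an app currently presents remains valid. The following sketch uses only Python's standard library; the function names are made up for this example, and `fetch_not_after` needs network access to the host you pass in.

```python
import socket
import ssl


def days_until_expiry(not_after: str, now: float) -> float:
    """Days between `now` (Unix seconds) and a certificate's notAfter date."""
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Connect over TLS and return the notAfter field of the server certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]
```

For example, `days_until_expiry(fetch_not_after(host), time.time())` reports the remaining validity in days for a host name of your choice, after importing `time`.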

HTTPS with Azure CDN and SSL certificate

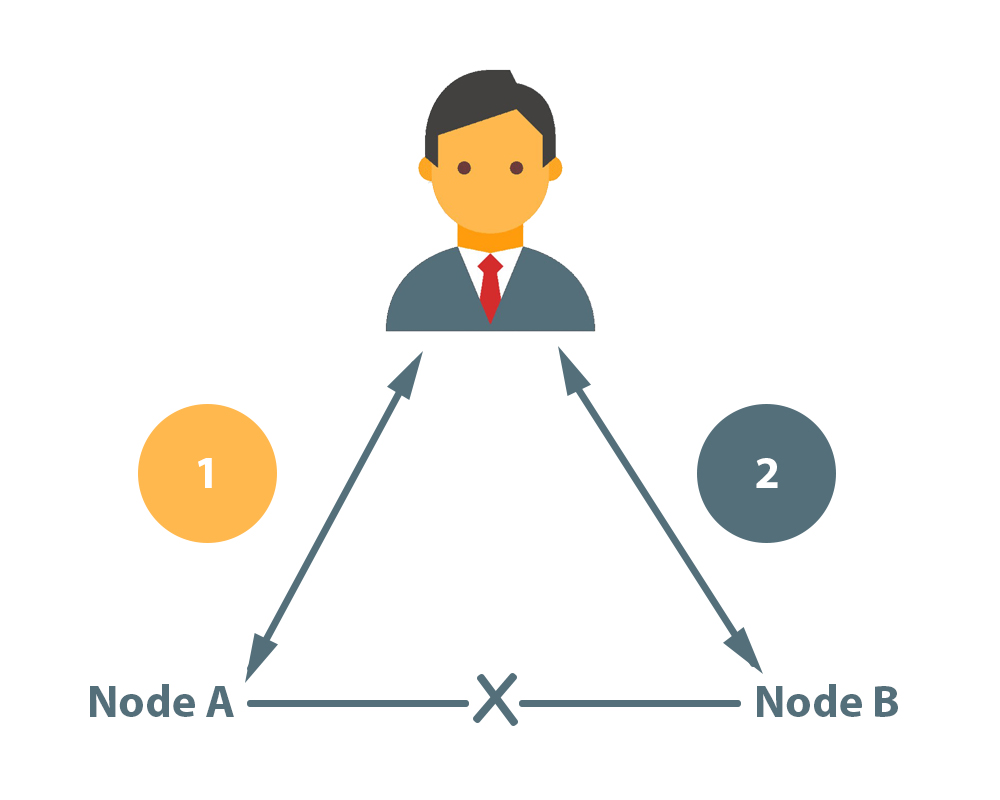

Another option for enabling HTTPS on a website hosted on Azure is to use Azure CDN (Content Delivery Network), a global network of edge nodes that helps deliver content to users faster and more reliably. With Azure CDN, website owners can enable HTTPS by adding a custom domain and purchasing an SSL/TLS certificate for it. The certificate can then be configured to work with Azure CDN by following the steps in the Azure documentation.

Instructions for setting up HTTPS with Azure CDN

To enable HTTPS with Azure CDN, follow these steps:

- Navigate to the Azure CDN page in the Azure portal.

- Select the CDN profile for which you want to enable HTTPS.

- In the left-hand menu, click on “Custom domains.”

- Click on the “Add custom domain” button.

- Enter your custom domain name and select the desired protocol (HTTP or HTTPS).

- Click on the “Validate” button to verify that you own the domain.

- Once the domain is validated, click on the “Add” button to add the custom domain to your CDN profile.

- Click on the “Add binding” button to bind the custom domain to your CDN endpoint.

- In the “Add binding” window, select the custom domain and the desired protocol (HTTP or HTTPS).

- Click on the “Add” button to add the binding.
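Domain validation in the steps above typically depends on a DNS CNAME record that points the custom domain at the CDN endpoint. The sketch below only compares a CNAME target you have already looked up (for example with `nslookup`) against the expected endpoint host. It assumes the endpoint host name has the form `<endpoint-name>.azureedge.net`, and the function names are hypothetical.

```python
def normalize_hostname(name: str) -> str:
    """Lower-case a DNS name and drop the optional trailing dot."""
    return name.strip().rstrip(".").lower()


def cname_points_to_endpoint(cname_target: str, endpoint_name: str) -> bool:
    """Check that a CNAME target is the azureedge.net host of the given endpoint."""
    # Assumption: endpoint hosts follow the <endpoint-name>.azureedge.net pattern.
    expected = f"{normalize_hostname(endpoint_name)}.azureedge.net"
    return normalize_hostname(cname_target) == expected
```

DNS names are case-insensitive and are often printed with a trailing dot, which is why both sides are normalized before the comparison.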

After the custom domain is added and bound to the CDN endpoint, website owners can purchase an SSL/TLS certificate and configure it to work with their custom domain. This can be done through Azure or through a third-party provider. Once the certificate is configured, website owners can enable HTTPS by turning on the “HTTPS Only” option in the CDN endpoint configuration.
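One way to confirm that HTTPS is actually being enforced is to send a plain HTTP request and see whether the response redirects to an `https://` address. The sketch below uses Python's standard library; the function names are made up for this example, and `check_host` needs network access to the host you pass in.

```python
import http.client
from typing import Optional
from urllib.parse import urlsplit


def is_https_redirect(status: int, location: Optional[str]) -> bool:
    """True if a response redirects the client to an https:// URL."""
    if status not in (301, 302, 307, 308) or not location:
        return False
    return urlsplit(location).scheme.lower() == "https"


def check_host(hostname: str) -> bool:
    """Send one plain-HTTP request and report whether it is redirected to HTTPS."""
    connection = http.client.HTTPConnection(hostname, 80, timeout=10)
    try:
        connection.request("GET", "/")
        response = connection.getresponse()
        return is_https_redirect(response.status, response.getheader("Location"))
    finally:
        connection.close()
```

`http.client` does not follow redirects on its own, so the first response can be inspected directly.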

In addition to these options, Azure offers other services that support a secure website setup, such as Azure Traffic Manager, which routes incoming traffic across endpoints at the DNS level, and Azure Key Vault, which stores certificates and keys.

Conclusion

This article showed how to enable HTTPS for websites hosted on Azure, using either Azure App Service or Azure CDN with a custom domain and an SSL/TLS certificate. Which option to use is up to you and depends on how your site is hosted.