Natural Language Processing (NLP) in Python – PC Can Recognise Your Speech | KoderShop

Natural Language Processing in Python and its explanation

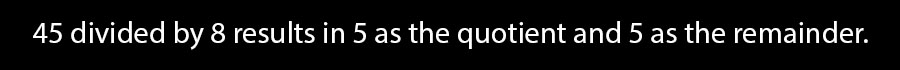

Computers struggle to interpret unstructured information such as human speech or writing, but they are very good at handling structured information such as database tables. This is where Natural Language Processing (NLP) in Python comes in. Its goal is to let computers process unstructured text and extract meaningful information from it. Computers still cannot fully understand human language, but NLP can save a huge amount of time. In short, NLP is the subfield of Artificial Intelligence (AI) concerned with the interaction between humans and computers through language.

With Python, NLP developers can organize and structure data to perform tasks such as translation, sentiment analysis, speech recognition, and more. Python is probably the most convenient programming language for NLP. Languages such as Java and R are also used, but Python is used more frequently. Well-known applications of NLP include Cortana, Siri, and Alexa.

Rule-based NLP vs. Statistical NLP:

A rule-based NLP system relies on built-in linguistic rules. It analyzes the text and builds a representation from which the output text can be generated. In machine translation, for example, the software needs a large and complex set of rules to transfer the grammatical structure of the source language into the target language. Such translation also needs very large dictionaries, and users can add their own terminology to improve the out-of-the-box translation quality.

A statistical system uses machine learning algorithms. It relies on statistical translation models whose parameters are derived from the analysis of corpora. Statistical NLP produces good results when large, high-quality corpora are available. This type of NLP is CPU intensive and requires an extensive hardware configuration.

Natural Language Understanding Python

Natural language understanding (NLU) is a branch of AI that deals with understanding input given as text or speech.

NLU helps humans interact with computers. It is the ability to understand commands without the special syntax of computer languages such as Python or C++. NLU also lets computers communicate back to people in their own languages, for example English, Spanish, or French.

You can see NLU at work in chatbots and voice bots that interact with people without any supervision. Many IT companies, such as Amazon, Apple, Google, and Microsoft, use NLU in their projects.

Natural Language Generation Python

When you have a data set and want to produce written or spoken narratives from it, natural language generation (NLG) is the tool of choice. Together with natural language processing (NLP) and natural language understanding (NLU), NLG makes two-way interaction between humans and machines possible.

NLG produces text very quickly, so it is especially useful for news production and other time-sensitive stories.
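As a rough illustration of producing text from a data set, the sketch below fills a sentence template from one structured record. Template filling is only one very simple form of NLG, and the `describe_weather` function, the record fields, and the sample values are all made up for this example.

```python
# Minimal template-based NLG sketch (illustrative only).
# The function name, record fields and values are assumptions for this example.

def describe_weather(record):
    """Turn one structured weather record into an English sentence."""
    # Pick a wording based on the data, then fill the template.
    if record["high_c"] > record["yesterday_high_c"]:
        trend = "warmer"
    else:
        trend = "no warmer"
    return (
        f"In {record['city']}, expect {record['condition']} weather "
        f"with a high of {record['high_c']} degrees Celsius, "
        f"{trend} than yesterday."
    )

record = {"city": "Lisbon", "condition": "sunny", "high_c": 24, "yesterday_high_c": 21}
print(describe_weather(record))
# In Lisbon, expect sunny weather with a high of 24 degrees Celsius, warmer than yesterday.
```

Because the sentence is assembled directly from the record, a new record yields a new sentence immediately, which is what makes this kind of generation attractive for time-sensitive reports.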

Natural Language Processing Python packages (Libraries)

Python has the NLTK (Natural Language Toolkit) package, which you can use for Natural Language Processing. NLTK is a free, open source project and one of the main tools for natural language processing and machine learning on text. The command below installs it.

$ python -m pip install nltk==3.5

spaCy is relatively young and very popular. At the time of writing, its GitHub repository had over 22.4k stars and 3.7k forks, considerably more than NLTK. It is written in Python and Cython, which makes it fast and efficient when working with large datasets. It is an industrial-strength library designed for use in production.

Some features of spaCy:

- It provides support for linguistically motivated tokenization in over 60 languages.

- It offers 64 trained pipelines for 19 languages.

- It provides functions for named entity recognition, part-of-speech tagging, dependency parsing, sentence segmentation, text classification, lemmatization, morphological analysis, entity linking, and more.

Natural Language Processing algorithms list:

Tokenization

Tokenization is one of the most popular NLP techniques in Python. It divides text into units called tokens, usually words or sentences. A long string is broken into smaller parts, the “tokens” (hence the name “tokenization”), which can be words, symbols, numbers, and so on. Tokens are also the basic units a model works with, so they help when developing NLP models in Python. Simple tokenizers use whitespace to separate one token from another.

Tokenization has some challenges and limitations. Whitespace-based tokenization works well for languages such as English or French, but not for languages such as Chinese, Japanese, Korean, Thai, or Urdu, where word boundaries are not reliably marked by spaces, so these languages need dedicated tools. Other languages pose different difficulties. Arabic, for instance, has a complicated morphology: a single Arabic word may contain up to seven tokens.

NLP Implementation (Tokenization):

import nltk

nltk.download('punkt')  # tokenizer models used by word_tokenize

phrase = "I will go for a walk tomorrow so I hope the weather will be good."
nltk_tokens = nltk.word_tokenize(phrase)
print(nltk_tokens)
# output: ['I', 'will', 'go', 'for', 'a', 'walk', 'tomorrow', 'so', 'I', 'hope', 'the', 'weather', 'will', 'be', 'good', '.']
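To make the whitespace limitation concrete, here is a small standard-library sketch that compares two naive tokenizers. It is not how NLTK tokenizes; the function names `whitespace_tokenize` and `regex_tokenize` and the regular expression are assumptions chosen for this illustration.

```python
import re

def whitespace_tokenize(text):
    """Split on runs of whitespace only; punctuation stays attached to words."""
    return text.split()

def regex_tokenize(text):
    """Split into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

english = "I hope the weather will be good."
print(whitespace_tokenize(english))
# ['I', 'hope', 'the', 'weather', 'will', 'be', 'good.']
print(regex_tokenize(english))
# ['I', 'hope', 'the', 'weather', 'will', 'be', 'good', '.']

# Chinese is written without spaces, so neither rule finds the word boundaries.
chinese = "今天天气很好"  # roughly: "The weather is very good today"
print(whitespace_tokenize(chinese))
# ['今天天气很好']
print(regex_tokenize(chinese))
# ['今天天气很好']
```

Both naive rules return the whole Chinese sentence as a single token, which is why such languages need a dedicated word segmenter instead of a whitespace or punctuation rule.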

Stemming

Stemming is the process of reducing inflected words to their base form. It is rule-based: a word is reduced to its stem by removing the suffixes or prefixes attached to it. For example, a stemming algorithm truncates the words “feathers” and “feathered” to the stem “feather”. The two biggest problems in stemming are overstemming and understemming. Overstemming occurs when words with different meanings are reduced to the same stem even though they should stay distinct. Understemming occurs when words that share a root, and should be reduced to the same stem, end up with different stems.

Python Implementation (Stemming):

import nltk
from nltk.tokenize import word_tokenize
from nltk.stem import PorterStemmer

# Resources used by the examples in this article
nltk.download('wordnet')
nltk.download('stopwords')
nltk.download('punkt')

stemmer = PorterStemmer()
phrase = "watching the films"
words = word_tokenize(phrase)

# Stem each token and collect the results
stemmed_words = []
for word in words:
    stemmed_words.append(stemmer.stem(word))

" ".join(stemmed_words)
# output: watch the film
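Overstemming and understemming are easier to see with a deliberately naive stemmer. The sketch below is not the Porter algorithm; `naive_stem`, its suffix list, and the minimum stem length are hypothetical choices made only to show both failure modes.

```python
# Toy suffix-stripping stemmer (illustrative only, not the Porter algorithm).
SUFFIXES = ("ing", "ed", "es", "s")  # hypothetical rule list, checked in order

def naive_stem(word, min_stem=3):
    """Strip the first matching suffix, keeping at least `min_stem` characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

# The intended behaviour: both forms share one stem.
print(naive_stem("feathers"), naive_stem("feathered"))  # feather feather

# Overstemming: unrelated words collapse to the same stem.
print(naive_stem("caring"), naive_stem("cars"))         # car car

# Understemming: related words end up with different stems.
print(naive_stem("running"), naive_stem("run"))         # runn run
```

Real stemmers such as Porter's add many more rules to reduce these errors, but they cannot remove them entirely because they never consult a dictionary.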

Lemmatization

Lemmatization finds the dictionary form of a word instead of simply truncating it. It brings a word form to its lemma (base form), typically by looking the form up in a table or dictionary, and you can also add custom rules for analyzing words. The main difference between lemmatization and stemming is that lemmatization takes the context (such as the part of speech) into account and converts the word to its dictionary form, while stemming just strips characters from the ends of the word.

Lemmatization NLP code:

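import nltk
from nltk.tokenize import word_tokenize
from nltk.stem import WordNetLemmatizer

nltk.download('punkt')    # tokenizer models used by word_tokenize
nltk.download('wordnet')  # WordNet data used by the lemmatizer
nltk.download('omw-1.4')

lemmatizer = WordNetLemmatizer()
phrase = "watching the films"
words = word_tokenize(phrase)

# Lemmatize each token (treated as a noun by default)
lemmatized_words = []
for word in words:
    lemmatized_words.append(lemmatizer.lemmatize(word))

" ".join(lemmatized_words)
# output: watching the film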
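The table lookup and the role of context can be sketched with a toy lemmatizer keyed by word form and part of speech. The `LEMMA_TABLE` entries and the `lemmatize` helper below are hypothetical and only illustrate the idea; a real lemmatizer relies on a full dictionary such as WordNet.

```python
# Toy lemma table keyed by (word form, part of speech).
# The entries and the helper name are assumptions for this illustration.
LEMMA_TABLE = {
    ("saw", "verb"): "see",
    ("saw", "noun"): "saw",
    ("films", "noun"): "film",
    ("watching", "verb"): "watch",
    ("better", "adjective"): "good",
}

def lemmatize(word, pos):
    """Return the lemma for (word, pos), or the word unchanged if not listed."""
    return LEMMA_TABLE.get((word.lower(), pos), word)

print(lemmatize("saw", "verb"))       # see
print(lemmatize("saw", "noun"))       # saw
print(lemmatize("watching", "verb"))  # watch
print(lemmatize("watching", "noun"))  # watching
```

The same form maps to different lemmas depending on its part of speech. This is also why the NLTK example leaves “watching” untouched: `WordNetLemmatizer.lemmatize` treats words as nouns unless a different `pos` argument (for example `pos='v'`) is passed.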

Named Entity Recognition (NER)

Named entity recognition (NER) locates and classifies named entities in text, such as people, organizations, locations, dates, times, and percentages. It is one of the most widely used NLP techniques in Python.

NER NLP Python example:

import nltk

nltk.download('punkt')
nltk.download('averaged_perceptron_tagger')
nltk.download('maxent_ne_chunker')
nltk.download('words')  # word list required by the NE chunker

phrase = "One of the most popular physicists was Albert Einstein and he was born in Germany."

for sent in nltk.sent_tokenize(phrase):
    for chunk in nltk.ne_chunk(nltk.pos_tag(nltk.word_tokenize(sent))):
        # Named entities are labelled subtrees; plain tokens are (word, tag) tuples
        if hasattr(chunk, 'label'):
            print(chunk.label(), ' '.join(a[0] for a in chunk))

# output: PERSON Albert Einstein
#         GPE Germany

Part-of-Speech (POS) Tagging

Part-of-speech (POS) tagging labels each token in a text with its grammatical category, such as noun, verb, or adjective.

POS tagging NLP Python example:

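import nltk

nltk.download('punkt')
nltk.download('averaged_perceptron_tagger')

phrase = "The weather is good today."
tokens = nltk.word_tokenize(phrase)
tagged = nltk.pos_tag(tokens)  # pair each token with its part-of-speech tag
print(tagged)
# output: [('The', 'DT'), ('weather', 'NN'), ('is', 'VBZ'), ('good', 'JJ'), ('today', 'NN'), ('.', '.')]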

Stop word removal

Stop word removal is one of the most commonly used preprocessing steps in NLP applications written in Python. It removes words that occur frequently across all the documents in a corpus. The most common stop words are articles and pronouns. These words carry little meaning for some NLP tasks, such as information retrieval, so removing them usually has little impact on the result. In most cases, the stop word list for a given language is a hand-picked list of the words that occur most often in a collection of written texts.

Python Implementation (Stop Word Removal):

import nltk
from nltk.tokenize import word_tokenize
from nltk.corpus import stopwords

nltk.download('punkt')
nltk.download('stopwords')

stop_words = stopwords.words('english')
phrase = "Here is an example sentence"
words = word_tokenize(phrase)

# Keep only the tokens that are not in the stop word list (case-sensitive)
stripped_phrase = []
for word in words:
    if word not in stop_words:
        stripped_phrase.append(word)

" ".join(stripped_phrase)
# output: Here example sentence
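The NLTK example keeps “Here” because the comparison is case-sensitive and the list stores lowercase entries. A minimal sketch of a case-insensitive variant, using a small hand-picked stop list, could look like this; the `STOP_WORDS` set and `remove_stop_words` are assumptions for illustration, not NLTK's list.

```python
# Hand-picked stop list for illustration; real lists are much longer.
STOP_WORDS = {"a", "an", "the", "is", "here", "it", "of"}

def remove_stop_words(text):
    """Drop stop words, comparing in lowercase so 'Here' matches 'here'."""
    return [word for word in text.split() if word.lower() not in STOP_WORDS]

print(" ".join(remove_stop_words("Here is an example sentence")))
# example sentence
```

Storing the list as a set also makes each membership check constant-time, which matters once the stop list and the corpus grow.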

Sentiment Analysis

The NLTK library contains many utilities that help you analyze data, and sentiment analysis is one of them.

Sentiment analysis is an NLP technique that identifies the attitude or emotional tone of a given text. There are different kinds of sentiment analysis, but one of the most common approaches classifies data as positive, negative, or neutral. You can analyze comments and product reviews to better understand your audience.
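The positive, negative, and neutral split can be sketched with a tiny lexicon-based classifier. This is a toy, not NLTK's analyzer: the word lists and the `classify_sentiment` function are made up for this example, and a real system would use a much larger lexicon or a trained model.

```python
# Tiny hand-made sentiment lexicon (assumption for this sketch).
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "broken"}

def classify_sentiment(text):
    """Label text as positive, negative or neutral by counting lexicon hits."""
    # Lowercase each word and strip common punctuation from its ends.
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment("I love this phone, the screen is great!"))  # positive
print(classify_sentiment("Terrible battery and a broken charger."))   # negative
print(classify_sentiment("The package arrived on Tuesday."))          # neutral
```

A word-counting approach like this ignores negation ("not good") and sarcasm, which is one reason practical tools add extra rules or learn from labeled examples.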

TF-IDF

If you want to know how important a word is to a document in a collection of documents, you can use TF-IDF (term frequency-inverse document frequency). It is a statistical measure that evaluates the relevance of a word. It works by multiplying two quantities: the number of times the word appears in a document and the inverse frequency of the word across the collection of documents. It is useful for weighting words in machine learning algorithms for Natural Language Processing with Python. The score increases proportionally with the number of times a word appears in a document, but is offset by the number of documents that contain the word. Therefore, words that occur frequently in every document, such as “this”, “what”, and “if”, are ranked low even though they may appear many times, because they are not particularly relevant to any one document.
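The multiplication of the two quantities can be written out in a few lines of plain Python. This sketch assumes one common variant, term frequency as a share of the document length and IDF as the natural log of (number of documents / documents containing the term); libraries often apply smoothing, so their numbers may differ. The `tf_idf` function and the three-document corpus are made up for this example.

```python
import math

def tf_idf(term, document, corpus):
    """TF-IDF of `term` in `document`; documents are lists of tokens."""
    # Term frequency: share of the document's tokens that are `term`.
    tf = document.count(term) / len(document)
    # Inverse document frequency: log of (all documents / documents with `term`).
    containing = sum(1 for doc in corpus if term in doc)
    idf = math.log(len(corpus) / containing) if containing else 0.0
    return tf * idf

corpus = [
    "this film is a great film".split(),
    "this book is long".split(),
    "this is what it is".split(),
]

print(tf_idf("this", corpus[0], corpus))            # 0.0
print(round(tf_idf("film", corpus[0], corpus), 3))  # 0.366
```

“this” occurs in all three documents, so its IDF is log(3/3) = 0 and its score is zero, while “film” occurs twice in one document and nowhere else, so it scores highest for that document.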
